Part 5: Design Patterns – C# / .NET Interview Questions and Answers

This chapter explores the design patterns, anti-patterns, and principles commonly used in .NET development.

The answers are split into sections covering what 👼 Junior, 🎓 Middle, and 👑 Senior .NET engineers should know about each topic.

Also, please take a look at other articles in the series: C# / .NET Interview Questions and Answers

📐 Principles & Anti-Patterns

❓ How do you detect and fix a God Object in a legacy .NET codebase?

A God Object is a class that knows too much or does too much. It ends up being a dumping ground for logic, data, and side effects. In a legacy codebase, such classes often start as “Manager” or “Helper” classes and quietly grow into untestable monsters.

How to detect God Object:

- The class has over 1,000 lines and continues to grow.

- It has too many injected dependencies.

- It deals with business logic, database access, and UI formatting, all in one place.

- Unit tests take forever or don’t exist at all.

How to fix it:

- Start with changes that don't alter behavior: add region markers, method headers, or comments that mark out the separate responsibilities.

- Extract cohesive behaviors into smaller classes by grouping related methods and data.

- Introduce interfaces to isolate dependencies.

- Use composition over inheritance.

- Write tests as you break it up. It’s your safety net.

What .NET engineers should know:

- 👼 Junior: Should understand that bloated classes are a code smell and hurt maintainability.

- 🎓 Middle: Able to identify symptoms of a God Object and apply basic refactoring like Extract Class, Extract Method, and use interfaces to separate concerns.

- 👑 Senior: Should be able to untangle legacy God Objects systematically, create meaningful abstractions, design refactoring plans that minimize risk, and coach the team on best practices to avoid reintroducing them.

📚 Resources:

- Understanding God Objects in Object-Oriented Programming

- The “God Object” Anti-Pattern in Software Architecture.

❓ Explain YAGNI (“You Aren’t Gonna Need It”) with an example from your past projects.

YAGNI is a mindset: “Don’t build for tomorrow’s problem until today needs it.” Developers love future-proofing. But often, that future never comes—and now you’re maintaining dead code.

Real-world example (for reference only; in an interview, use an example from your own experience):

In one .NET project, we had a simple CRUD service for managing user roles. A teammate added a full-blown strategy pattern for handling different permission types "just in case" we’d need complex logic later.

Guess what? Three years later—still one permission model. That extra complexity just slowed down onboarding and testing.

What .NET engineers should know about YAGNI:

- 👼 Junior: Should know what YAGNI means and avoid building features before they are needed.

- 🎓 Middle: Should recognize overcomplexity and keep code focused on current requirements, not “just-in-case” logic.

- 👑 Senior: Should actively push back on overengineering in code reviews and architectural decisions, and be able to justify why not applying a pattern is often the smarter call.

📚 Resources: YAGNI – Martin Fowler

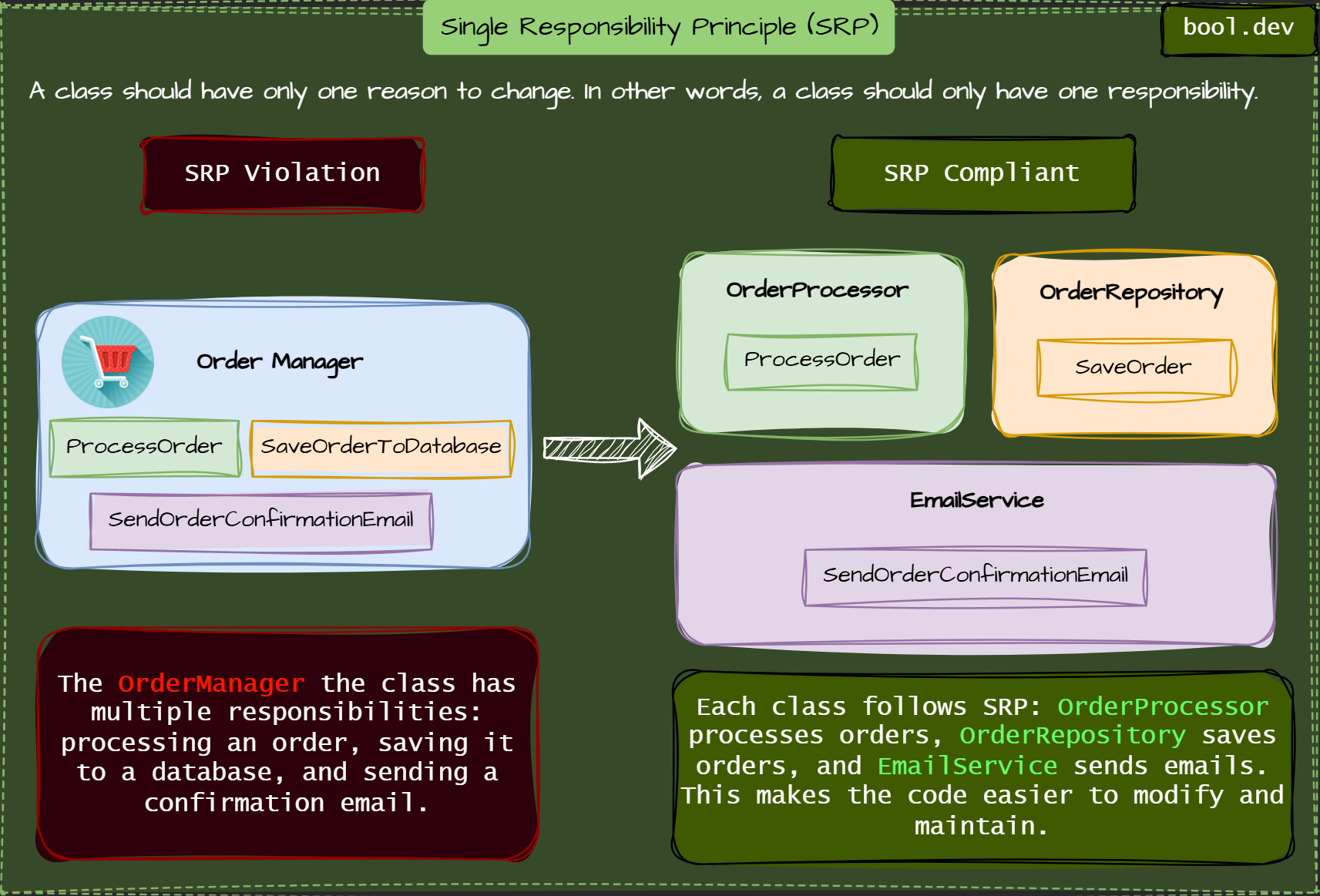

❓ What’s the difference between DRY and the Single Responsibility Principle, and when might they conflict?

- DRY (Don't Repeat Yourself) is about avoiding code duplication.

- The Single Responsibility Principle (SRP) is about ensuring that each class or module performs only one job.

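Both aim at better code, but in different ways:

- DRY helps you keep each piece of logic in one place.

- SRP helps you keep your classes focused.

They can conflict when two pieces of code look the same but change for different reasons. Merging them into one shared helper satisfies DRY, but that helper now serves two responsibilities, and a change requested by one caller can break the other.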
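A minimal sketch of how DRY and SRP can pull against each other. The class names and the length limit are hypothetical. The two rules are identical today, so DRY suggests merging them, but they belong to different parts of the business and may change independently, so SRP suggests keeping them apart:

```csharp
using System;

Console.WriteLine(CustomerNameRules.IsValid("Alice")); // True
Console.WriteLine(ProductNameRules.IsValid(""));       // False

// Kept separate on purpose: changing the product limit later
// cannot silently change customer validation.
public static class CustomerNameRules
{
    public const int MaxLength = 50; // hypothetical limit

    public static bool IsValid(string name) =>
        !string.IsNullOrWhiteSpace(name) && name.Length <= MaxLength;
}

public static class ProductNameRules
{
    public const int MaxLength = 50; // same value today, different reason to change

    public static bool IsValid(string name) =>
        !string.IsNullOrWhiteSpace(name) && name.Length <= MaxLength;
}
```

If the two rules prove to stay in lockstep over time, merging them later is a small refactoring; untangling a prematurely shared helper is usually harder.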

What .NET engineers should know:

- 👼 Junior: Should understand that DRY reduces repeated code, and SRP helps keep code organized by doing one thing per class.

- 🎓 Middle: Should know that too much reuse can lead to classes with too many responsibilities. Can decide when it’s better to keep code separate.

- 👑 Senior: Should be able to explain trade-offs between DRY and SRP. Can spot when shared code needs to be split to keep responsibilities clear.

❓ How can Low Coupling and High Cohesion from GRASP be measured or evaluated in a C# class design?

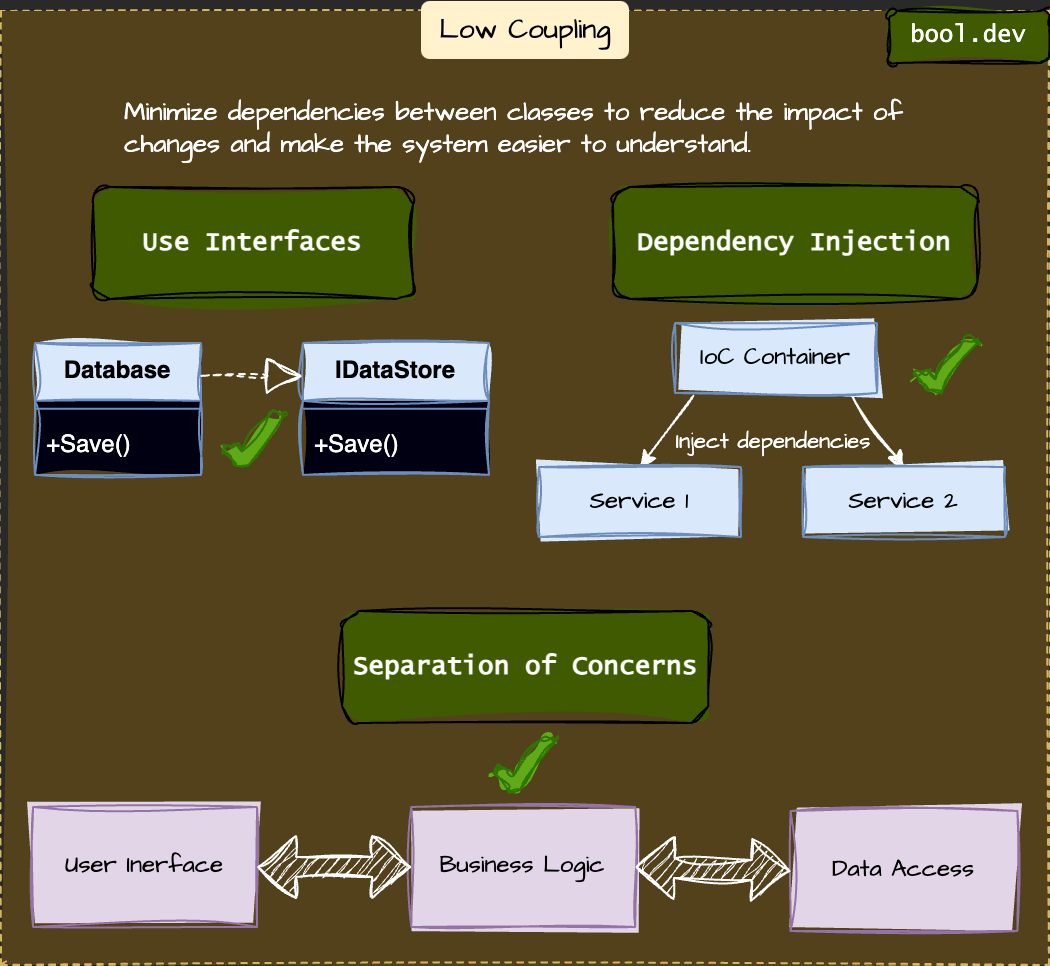

Low Coupling

Minimize dependencies between classes to reduce the impact of changes and make the system more understandable.

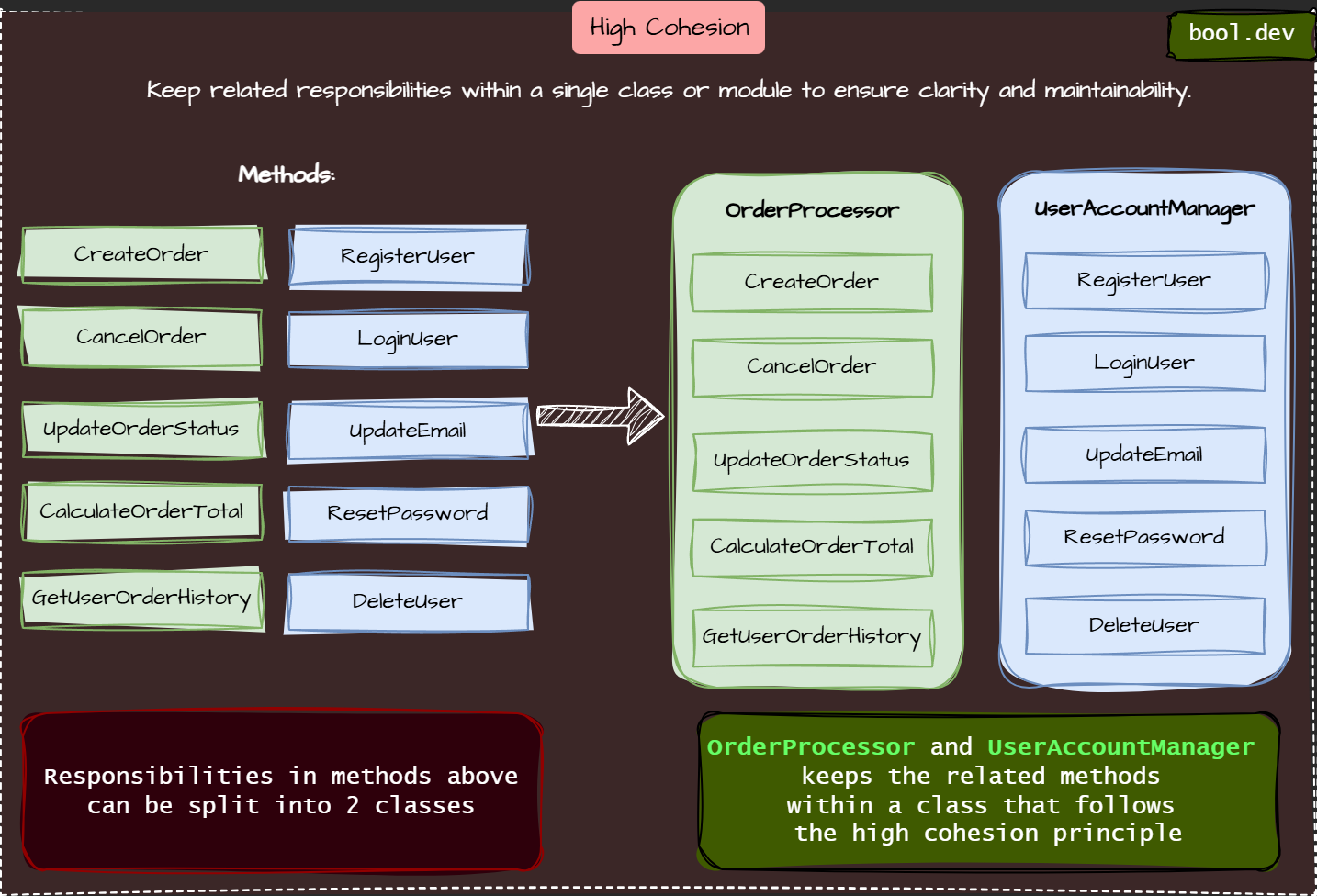

High Cohesion

Keep related responsibilities within a single class or module to ensure clarity and maintainability.

You can evaluate them by asking:

- Does this class need to know about many other classes to do its job? (If not, coupling is low, which is good.)

- Are its methods and fields tightly related to one responsibility? (If so, cohesion is high, which is good.)

Signs of high coupling:

- Too many constructor parameters

- Many external method calls (otherService.DoStuff())

- Hard to write unit tests without mocking half the app

Signs of low cohesion:

- Class name is vague (e.g., Utils, Manager)

- Methods deal with unrelated logic (e.g., DB + UI + business logic)

- You keep scrolling and can’t remember what the class was about

What .NET engineers should know:

- 👼 Junior: Should understand the idea that classes should do one thing and depend on as little as possible.

- 🎓 Middle: Should spot highly coupled or low cohesion classes in code reviews, and refactor toward better separation of concerns.

- 👑 Senior: Should design systems where cohesion and coupling are considered from the start. Uses metrics and experience to guide team design choices.

📚 Resources: GRASP Principles: General Responsibility Assignment Software Patterns

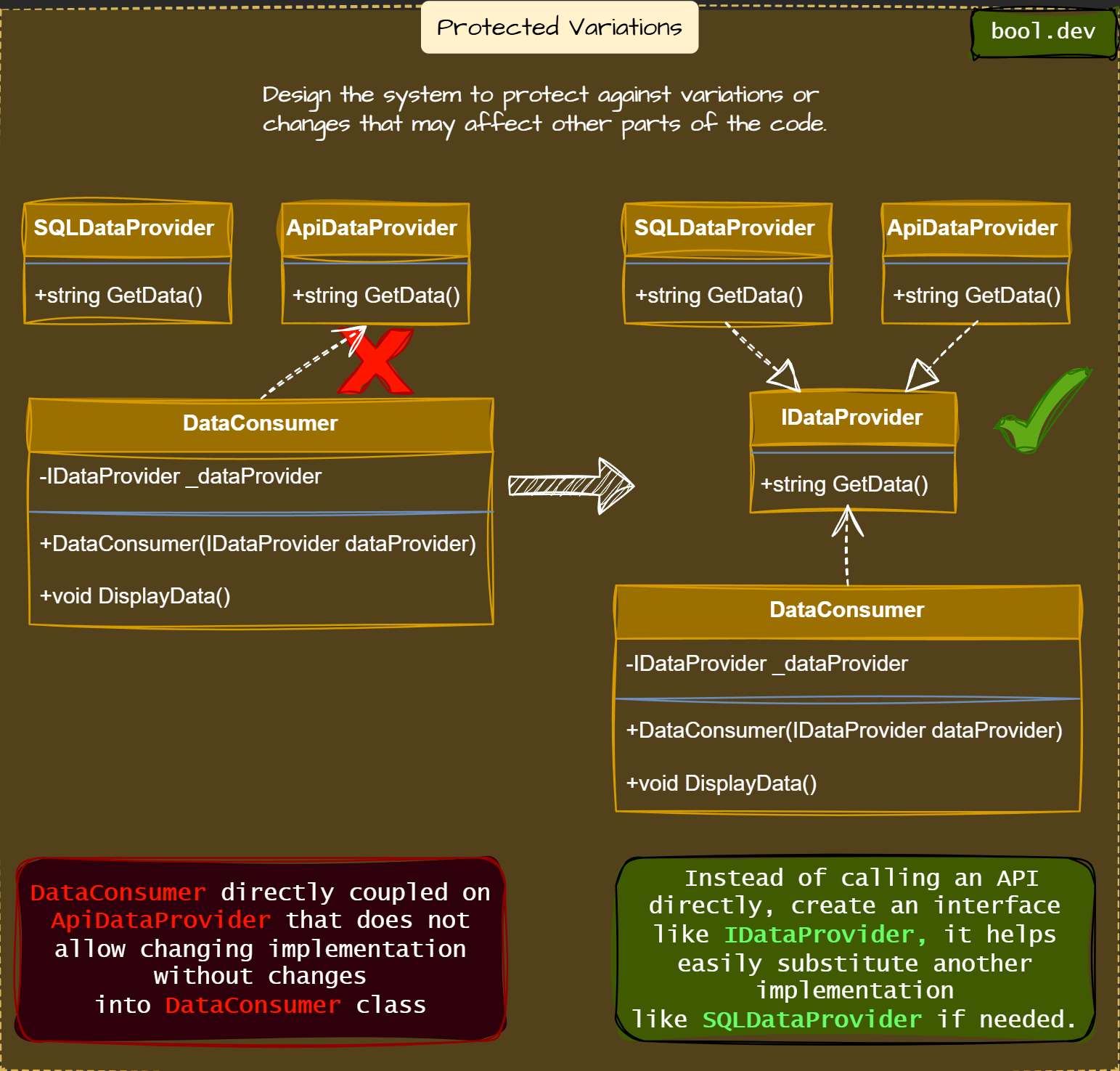

❓ How do Protected Variations and Pure Fabrication support extensible and decoupled architectures?

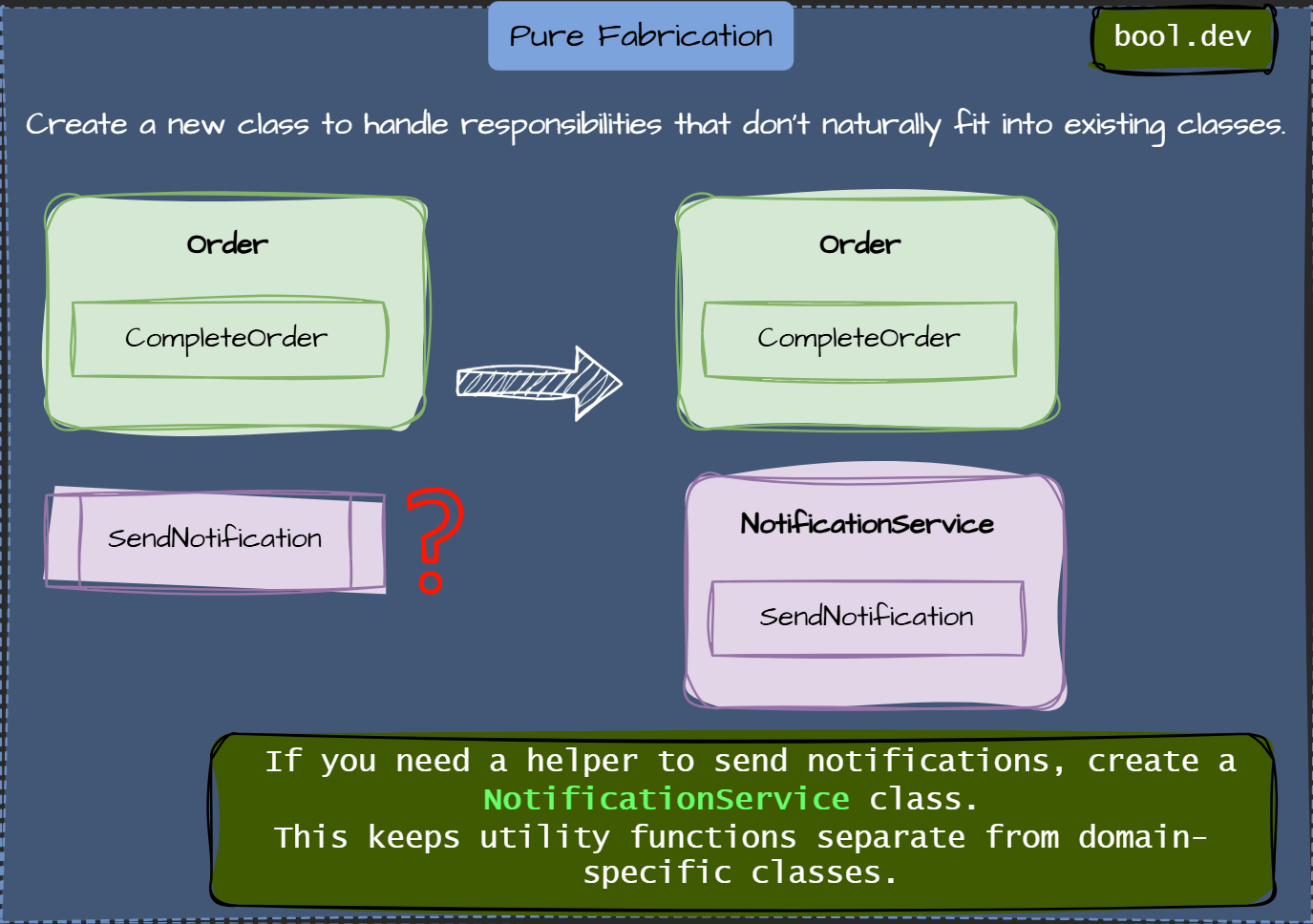

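Pure Fabrication

Assign a responsibility to an artificial class that does not represent a domain concept (for example, a repository or a service) when placing it on a domain object would hurt cohesion or coupling.

Protected Variations

Identify the points that are likely to change and wrap them in a stable interface, so that variations behind the interface do not ripple out to the rest of the system.

Together, Pure Fabrication and Protected Variations: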

- Decouple implementation details from consumers

- Make future changes (like switching libraries or tools) less painful

- Help with testing by replacing dependencies with mocks/stubs
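A minimal sketch of both principles working together. All names here (IExchangeRateProvider, FixedRateProvider, PriceConverter) are hypothetical and exist only for illustration. The interface is the protected variation point, and the converter is a fabricated class that does not correspond to any domain concept:

```csharp
using System;

// The converter depends only on the interface, so the rate source can be
// swapped (for example, for a test stub) without touching PriceConverter.
var converter = new PriceConverter(new FixedRateProvider(2m));
Console.WriteLine(converter.Convert(10m, "USD", "EUR")); // 20

// Protected Variations: a stable interface around a volatile detail
// (which rate source is used may change).
public interface IExchangeRateProvider
{
    decimal GetRate(string from, string to);
}

// A stand-in implementation; a real one might call an external API.
public class FixedRateProvider : IExchangeRateProvider
{
    private readonly decimal _rate;
    public FixedRateProvider(decimal rate) => _rate = rate;
    public decimal GetRate(string from, string to) => _rate;
}

// Pure Fabrication: not a domain concept. It exists only to keep
// conversion logic out of domain objects and callers.
public class PriceConverter
{
    private readonly IExchangeRateProvider _rates;
    public PriceConverter(IExchangeRateProvider rates) => _rates = rates;

    public decimal Convert(decimal amount, string from, string to) =>
        amount * _rates.GetRate(from, to);
}
```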

What .NET engineers should know:

- 👼 Junior: Should understand why using interfaces and helper classes (like repositories or services) improves code flexibility.

- 🎓 Middle: Should apply Protected Variations to isolate volatile code, and use Pure Fabrication patterns to organize logic that doesn’t fit domain models.

- 👑 Senior: Should design systems with clear extension points and clean abstractions. Able to balance introducing fabricated classes without overengineering.

📚 Resources: GRASP Principles: General Responsibility Assignment Software Patterns

❓ Describe an instance of the Service Locator anti-pattern, and how you would convert it to Dependency Injection.

The Service Locator hides dependencies by retrieving them from a container within the class. This makes the code harder to understand and test, because you can’t easily tell what the class depends on.

Example of Service Locator:

    public class OrderProcessor
    {
        public void Process()
        {
            var emailService = ServiceLocator.Get<IEmailService>();
            emailService.SendConfirmation();
        }
    }

Here, OrderProcessor depends on IEmailService, but nothing in its public API says so. A unit test also can't swap in a mock without configuring the locator or a real container.

Refactored with Dependency Injection:

    public class OrderProcessor
    {
        private readonly IEmailService _emailService;

        public OrderProcessor(IEmailService emailService)
        {
            _emailService = emailService;
        }

        public void Process()
        {
            _emailService.SendConfirmation();
        }
    }

Now that the dependency is explicit, it becomes easy to test and maintain. The class doesn’t pull dependencies; it gets them handed in.
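Because the dependency arrives through the constructor, a test can pass in a hand-written fake. A minimal sketch: FakeEmailService is a hypothetical test double, and IEmailService and OrderProcessor are repeated here only to keep the example self-contained.

```csharp
using System;

// A hand-written fake is enough; no container or mocking library is needed.
var fake = new FakeEmailService();
var processor = new OrderProcessor(fake);

processor.Process();

Console.WriteLine(fake.ConfirmationsSent); // 1

public interface IEmailService
{
    void SendConfirmation();
}

public class OrderProcessor
{
    private readonly IEmailService _emailService;
    public OrderProcessor(IEmailService emailService) => _emailService = emailService;
    public void Process() => _emailService.SendConfirmation();
}

// Hypothetical test double that records calls instead of sending email.
public class FakeEmailService : IEmailService
{
    public int ConfirmationsSent { get; private set; }
    public void SendConfirmation() => ConfirmationsSent++;
}
```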

When Service Locator makes sense:

One common use case is a factory or plugin system, where you want to resolve implementations dynamically based on runtime data and don’t want to inject every possible option upfront.

Example: a background job processor that resolves handlers by job type:

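    public class JobExecutor
    {
        private readonly IServiceProvider _serviceProvider;

        public JobExecutor(IServiceProvider serviceProvider)
        {
            _serviceProvider = serviceProvider;
        }

        public void Execute(string jobType)
        {
            // Type.GetType returns null when no matching type exists, so fail with a clear message.
            Type handlerType = Type.GetType($"MyApp.Jobs.{jobType}Handler")
                ?? throw new InvalidOperationException($"No handler found for job type '{jobType}'.");

            // GetRequiredService(Type) is an extension method from Microsoft.Extensions.DependencyInjection.
            var handler = (IJobHandler)_serviceProvider.GetRequiredService(handlerType);
            handler.Handle();
        }
    }

Here, you’re resolving a handler by string name, and you don’t know in advance which one you’ll need. Injecting every handler into JobExecutor wouldn't scale well.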

This usage is acceptable because:

- The locator is isolated in a single location.

- The consumer (JobExecutor) doesn’t hide its dependency on IServiceProvider.

- It’s at the composition boundary, not scattered throughout business logic.

What .NET engineers should know:

- 👼 Junior: Should understand why hidden dependencies are bad and how constructor injection improves clarity.

- 🎓 Middle: Should avoid Service Locator in application code, and be comfortable injecting dependencies using built-in or third-party DI containers.

- 👑 Senior: Should enforce DI as part of design decisions. Can explain when a Service Locator is harmful and guide refactoring toward clear dependency management.

📚 Resources:

- Inversion of Control Containers and the Dependency Injection pattern

- .NET dependency injection

- Service Locator is not an Anti-Pattern

❓ What code smells indicate GRASP principle violations, and how did you refactor them?

GRASP principles help guide solid object-oriented design. When they're violated, you often see code smells—signals that something's off in structure or responsibility.

Here are some familiar smells and what they might tell you:

1. God Class

Violates: High Cohesion, Controller

A huge class that tries to do everything: UI, logic, data access.

Refactor: Extract smaller classes that each perform a single task. Apply SRP and delegate responsibilities (e.g., create OrderValidator, OrderRepository, OrderPresenter).

2. Feature Envy

Violates: Information Expert

A method accesses too much data from another class.

Refactor: Move the logic closer to where the data lives. Let the class that owns the data be responsible for the behavior, too.

3. Switch/if chains on types

Violates: Polymorphism, Low Coupling

Too many if or switch statements based on type names or enums.

Refactor: Replace with polymorphism using the strategy pattern or interface dispatch. Let each type decide what to do instead of manually checking the types.

4. Many-to-Many Dependencies

Violates: Low Coupling

Lots of classes depend directly on each other.

Refactor: Use interfaces, events, or inversion of control (IoC) to decouple the components.

5. Vague or mixed-purpose classes

Violates: High Cohesion, Pure Fabrication

Classes like Utils, Helpers, or Manager that group unrelated things together.

Refactor: Apply Pure Fabrication and create purpose-driven classes (e.g., InvoiceFormatter, DateParser, EmailSender), even if they don’t represent real-world concepts.

What .NET engineers should know:

- 👼 Junior: Should recognize code smells like big classes or too many if statements as potential signs of design problems.

- 🎓 Middle: Should connect code smells to GRASP principles and know common refactorings like Extract Class, Move Method, or Replace Conditionals with Polymorphism.

- 👑 Senior: Should spot deeper architectural violations, lead refactoring efforts at system scale, and guide teams on writing GRASP-aligned, maintainable code from the start.

📚 Resources: GRASP Principles: General Responsibility Assignment Software Patterns

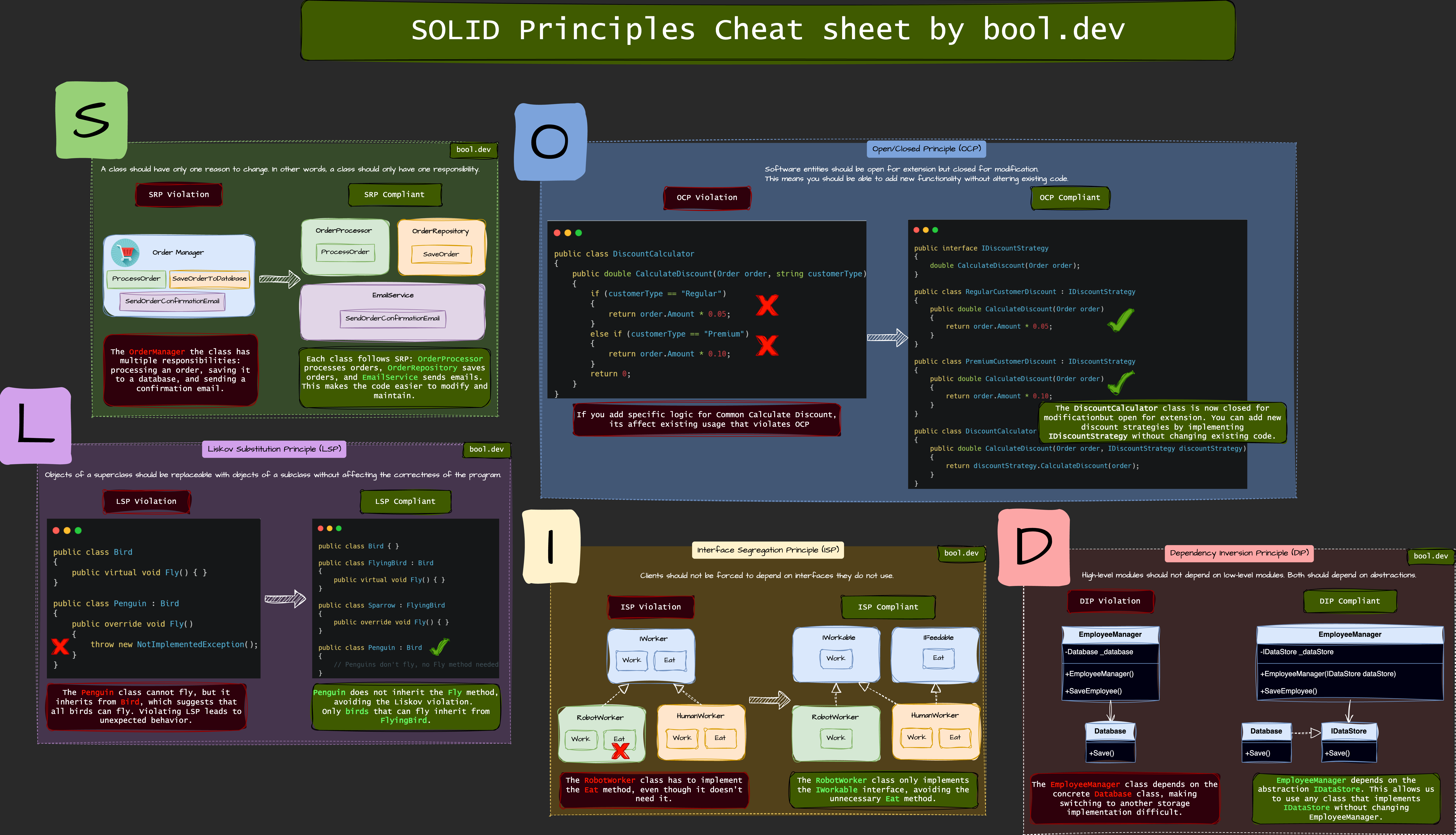

❓ Can you explain what the SOLID principles are in practice?

- S – Single Responsibility Principle

A class should have only one reason to change. For instance, if a class is responsible for both saving data to a file and validating input, it has taken on two responsibilities. It's better to separate these functions into different classes.

- O – Open/Closed Principle

Classes should be open for extension but closed for modification. Rather than altering an existing class to add new behavior, you can extend it through inheritance or use composition.

- L – Liskov Substitution Principle

Subtypes should behave like their base types. For example, if you have a Bird class with a Fly() method, creating a Penguin subclass that cannot fly would violate this principle. Such scenarios indicate that the inheritance model needs reevaluation.

- I – Interface Segregation Principle

It's better to have multiple small interfaces rather than one large interface. If your IShape interface includes methods like Draw(), Resize(), and Rotate(), not every shape will require all of these methods. By breaking them into smaller interfaces, you prevent forcing unnecessary implementations on specific classes.

- D – Dependency Inversion Principle

High-level modules should depend on abstractions rather than concrete classes. For example, instead of instantiating a SmtpClient() directly inside a service, you should inject an IEmailSender. This approach enhances code flexibility and makes it easier to test.

See our SOLID Cheat Sheet to learn more.

What .NET engineers should know:

- 👼 Junior: Should understand what each SOLID principle means and be able to identify simple examples in code.

- 🎓 Middle: Should apply SOLID in day-to-day development, especially when designing classes or reviewing code.

- 👑 Senior: Should design architecture that reflects SOLID principles, while balancing them with readability and real-world constraints.

❓ How do you balance the Open/Closed Principle with frequent business changes in agile teams?

The Open/Closed Principle (OCP) states that your code should be open for extension but closed for modification. In theory, this sounds great. However, in agile teams, requirements often change rapidly and frequently. Attempting to “future-proof” everything can lead to unnecessary abstractions and over-engineering.

Practical Ways to Balance OCP:

- Avoid Over-Abstraction Early: Hold off on abstracting components until at least two use cases have emerged. This helps prevent premature complexity.

- Use Composition and Interfaces: Isolate areas of your code that are likely to change by using interfaces or strategy patterns. This allows for flexibility in the event of changes.

- Apply OCP Selectively: Implement the Open/Closed Principle only where the code is already changing. There's no need to generalize stable code.

- Refactor Incrementally: Evolve your code’s design over time rather than attempting to overhaul it all at once.

Example:

Consider a ReportGenerator that only exports to PDF. Resist the urge to create an IExportStrategy interface until a real need for exporting to CSV or Excel arises. At that point, you can refactor the code accordingly.
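Once a second format is a real requirement, the refactoring might look like the sketch below. The method shapes and the two strategy classes are assumptions made for illustration, and the PDF strategy is only a placeholder that returns a string rather than producing a real PDF.

```csharp
using System;
using System.Collections.Generic;

var generator = new ReportGenerator(new CsvExportStrategy());
Console.WriteLine(generator.Generate(new[] { "a", "b" })); // a,b

// Introduced only after a second export format became a real requirement.
public interface IExportStrategy
{
    string Export(IEnumerable<string> rows);
}

public class CsvExportStrategy : IExportStrategy
{
    public string Export(IEnumerable<string> rows) => string.Join(",", rows);
}

// Placeholder for the original PDF path; real PDF output is out of scope here.
public class PdfExportStrategy : IExportStrategy
{
    public string Export(IEnumerable<string> rows) => "[PDF] " + string.Join(" ", rows);
}

// A new format now means a new strategy class, not a change to ReportGenerator.
public class ReportGenerator
{
    private readonly IExportStrategy _strategy;
    public ReportGenerator(IExportStrategy strategy) => _strategy = strategy;
    public string Generate(IEnumerable<string> rows) => _strategy.Export(rows);
}
```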

What .NET engineers should know:

- 👼 Junior: Should understand that OCP means not rewriting stable code every time something changes.

- 🎓 Middle: Should recognize when to extend behavior using interfaces, but avoid building abstractions too early.

- 👑 Senior: Should coach the team on when OCP is useful and when it adds complexity. Knows how to evolve code over time using patterns like Strategy, Decorator, or composition.

❓ What’s the difference between an Anemic Domain Model and a strong Rich Domain Model? How can you prevent accidentally using the weaker one?

- An anemic domain model occurs when your domain classes are merely data containers with no behavior or logic. All the business logic resides elsewhere, typically in services.

- A rich domain model keeps both data and behavior together, modeling real-world concepts directly in code.

How to detect an anemic model:

    public class Invoice
    {
        public decimal Amount { get; set; }
        public DateTime DueDate { get; set; }
    }

    // In a service
    public void MarkAsPaid(Invoice invoice) { ... }

The class has no logic; it can’t protect its state.

Rich model version:

    public class Invoice
    {
        public decimal Amount { get; private set; }
        public DateTime DueDate { get; private set; }
        public bool IsPaid { get; private set; }

        public void MarkAsPaid()
        {
            if (IsPaid)
                throw new InvalidOperationException("Already paid.");

            IsPaid = true;
        }
    }

Now the domain enforces its own rules. This is easier to test and reason about.

How to avoid slipping into an anemic model:

- Use Encapsulation: make setters private or remove them.

- Move business rules into the domain object itself.

- Be wary of services named XManager, XProcessor, or XService that only manipulate dumb data objects.

- Apply DDD principles where appropriate, even in small projects.

What .NET engineers should know:

- 👼 Junior: Should understand that domain classes can (and should) include behavior, not just data.

- 🎓 Middle: Should recognize signs of anemic models and know how to move logic into domain objects to keep them cohesive.

- 👑 Senior: Should model business concepts clearly in code. Leads design decisions that encourage rich, intention-revealing models and avoid procedural, service-heavy design.

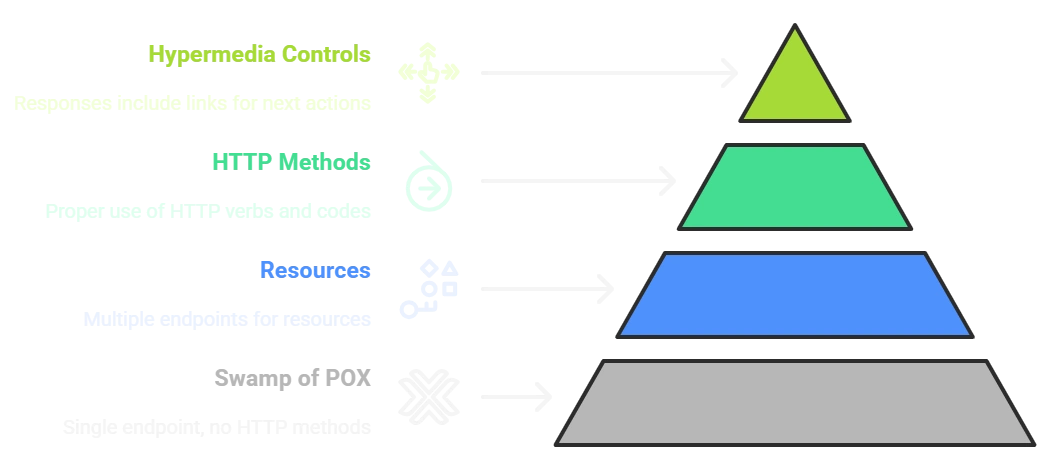

❓ Can you explain the Richardson Maturity Model and how to evaluate the maturity of your APIs?

The Richardson Maturity Model (RMM) helps evaluate how "RESTful" your API is. It categorizes REST into four levels of maturity, ranging from basic HTTP usage to full hypermedia.

Level 0 – The Swamp of POX

- Single endpoint (/api/doStuff) using only POST, often with JSON or XML payloads.

- No use of resources, HTTP methods, or status codes.

Level 1 – Resources

- Introduces multiple endpoints (/users, /orders, /products) that represent actual resources.

Level 2 – HTTP Methods

- Uses status codes (200, 404, 400) to describe results.

- Uses HTTP verbs properly:

  - GET for reads

  - POST for create

  - PUT/PATCH for update

  - DELETE for delete

Level 3 – Hypermedia Controls (HATEOAS)

- Responses include links that tell the client what it can do next.

- Example: a response from /orders/123 might include links to Cancel or Pay.

- Rarely used in practice, but it's the "pure REST" level.

How to evaluate your API:

- Does it have multiple resource-based URLs? 👉 Level 1

- Does it use proper HTTP verbs and status codes? 👉 Level 2

- Does it include links to actions in responses? 👉 Level 3

Most modern APIs aim for Level 2. Level 3 is great for discoverability, but adds complexity and is often overkill unless you're building hypermedia clients.

What .NET engineers should know:

- 👼 Junior: Should understand what HTTP verbs are and how REST APIs use different endpoints for different resources.

- 🎓 Middle: Should design APIs that follow Level 2 practices, using verbs, status codes, and clear resource paths.

- 👑 Senior: Should evaluate when (or if) to use hypermedia. Able to define API maturity level, spot REST violations, and balance RESTful design with practical client needs.

🏗️ GoF Patterns

❓ How do you ensure thread safety in a Singleton implementation without compromising performance?

In .NET, the safest and most performant way to implement a Singleton is to use the lazy initialization pattern with the help of the Lazy<T> type or a static constructor.
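The static-initialization variant mentioned above is not shown in the options that follow, so here is a minimal sketch of it. The class name StaticSingleton is hypothetical. It relies on the runtime guarantee that a type's static initializer runs once and is thread-safe:

```csharp
using System;

Console.WriteLine(ReferenceEquals(StaticSingleton.Instance, StaticSingleton.Instance)); // True

public sealed class StaticSingleton
{
    // The runtime executes static field initializers once, in a thread-safe way,
    // so no explicit lock is needed.
    private static readonly StaticSingleton _instance = new();

    // An explicit static constructor removes the beforefieldinit flag,
    // so initialization is not triggered earlier than the first member access.
    static StaticSingleton() { }

    private StaticSingleton() { }

    public static StaticSingleton Instance => _instance;
}
```

Compared with Lazy<T>, this version is slightly simpler, but the instance is created as soon as any static member of the class is touched, not only when Instance is read.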

Option 1: Using Lazy<T>

    public class MySingleton
    {
        private static readonly Lazy<MySingleton> _instance =
            new(() => new MySingleton());

        public static MySingleton Instance => _instance.Value;

        private MySingleton() { }
    }

Option 2: Using DI + Singleton Lifetime

    public interface IMyService
    {
        void DoWork();
    }

    public class MyService : IMyService
    {
        public void DoWork() => Console.WriteLine("Running");
    }

    // Program.cs
    builder.Services.AddSingleton<IMyService, MyService>();

What .NET engineers should know:

- 👼 Junior: Should understand the goal of a Singleton and know that static initialization and Lazy<T> are thread-safe ways to implement it.

- 🎓 Middle: Should know when to use Lazy<T> vs static init, and how to register a Singleton using DI for a cleaner, more flexible design.

- 👑 Senior: Should prefer DI-driven Singletons in application code. Avoids static state unless necessary and ensures proper lifetime management throughout the app.

❓ Explain why Object Pool is helpful in .NET, and how to implement it.

Object Pooling helps you reuse objects that are expensive to create or allocate, like buffers, StringBuilder, HttpClient or custom data processors.

Instead of creating a new object each time, you "borrow" one from a pool, use it, and return it. This reduces memory pressure and improves performance, especially in high-throughput systems.

When it's useful:

- You're allocating the same type of object many times per request.

- Objects are large or expensive to initialize

- The object can be reused safely (e.g., after being reset)

How to implement it in .NET:

.NET provides Microsoft.Extensions.ObjectPool, which includes ObjectPool<T> and built-in policies.

    var provider = new DefaultObjectPoolProvider();
    var pool = provider.CreateStringBuilderPool();

    var sb = pool.Get();
    sb.Append("Hello, ");
    sb.Append("world!");
    Console.WriteLine(sb.ToString());

    sb.Clear(); // Clean before returning
    pool.Return(sb);
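For raw buffers, the base class library ships its own pool, ArrayPool<T> in System.Buffers, which needs no extra package. The sketch below shows the borrow, use, return cycle with a try/finally so the buffer goes back to the pool even if processing throws. The buffer size and the values written are arbitrary.

```csharp
using System;
using System.Buffers;

// Rent may hand back a larger array than requested,
// so track how much of it you actually use.
byte[] buffer = ArrayPool<byte>.Shared.Rent(1024);
try
{
    int used = 4;
    buffer[0] = 1; buffer[1] = 2; buffer[2] = 3; buffer[3] = 4;

    int sum = 0;
    for (int i = 0; i < used; i++)
        sum += buffer[i];

    Console.WriteLine(sum); // 10
}
finally
{
    // clearArray: true wipes the contents so the next renter sees no stale data.
    ArrayPool<byte>.Shared.Return(buffer, clearArray: true);
}
```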

Custom object pool:

    public class MyObject { }

    public class MyObjectPolicy : PooledObjectPolicy<MyObject>
    {
        public override MyObject Create() => new MyObject();

        // Returning true tells the pool to keep the object for reuse.
        public override bool Return(MyObject obj) => true;
    }

    var pool = new DefaultObjectPool<MyObject>(new MyObjectPolicy());

Tips:

- Always reset objects before returning them (e.g., clear buffers)

- Don’t use pooling for objects with external state or heavy side effects

- Consider built-in alternatives (like IHttpClientFactory for HttpClient) before building your own

What .NET engineers should know:

- 👼 Junior: Should understand the goal of object pooling and recognize where reusing objects is better than creating new ones.

- 🎓 Middle: Should use ObjectPool<T> in performance-sensitive areas and know how to reset and return objects safely.

- 👑 Senior: Should assess when pooling is genuinely beneficial, write custom policies, and guide the team in using pooling without introducing bugs from shared state.

📚 Resources: Object reuse with ObjectPool in ASP.NET Core

❓ How do Decorator and Adapter interact in layered .NET microservices?

In layered .NET microservices, Decorator and Adapter patterns often work side-by-side, but they serve different purposes:

- A decorator adds behavior around an existing component without modifying its code.

- An adapter makes one interface appear to be another, allowing it to plug into a system.

In practice, an adapter is useful between layers or systems with different contracts.

Example: adapting an external API client to your domain interface.

    // External SDK
    public class LegacyAuthClient {
        public string LoginUser(string email, string pass) { ... }
    }

    // Adapter
    public class AuthAdapter : IAuthService {
        private readonly LegacyAuthClient _client;

        public AuthAdapter(LegacyAuthClient client) => _client = client;

        public bool Login(string email, string password) =>
            _client.LoginUser(email, password) == "OK";
    }

A decorator wraps services to add behaviors such as logging, caching, or retries.

Example: wrap your IAuthService with a decorator that logs logins.

    public class LoggingAuthDecorator : IAuthService
    {
        private readonly IAuthService _inner;

        public LoggingAuthDecorator(IAuthService inner) => _inner = inner;

        public bool Login(string email, string password)
        {
            Console.WriteLine("Login attempt");
            return _inner.Login(email, password);
        }
    }

How they interact:

- The adapter normalizes the interface (external to internal)

- Decorator enhances the behavior (internal to cross-cutting concerns)

In a microservice, the call chain might look like:

    Controller → LoggingAuthDecorator → RetryDecorator → AuthAdapter → LegacyAuthClient

What .NET engineers should know:

- 👼 Junior: Should understand that Adapters help systems talk to each other, and Decorators add features without changing code.

- 🎓 Middle & 👑 Senior: Should combine both patterns cleanly, using Adapters to isolate dependencies and Decorators to handle cross-cutting concerns.

📚 Resources: [RU] GoF Patterns

❓ Describe applying Composite in UI rendering or building tree-structured data.

The Composite pattern allows you to treat individual objects and groups of objects uniformly. It’s perfect for UI trees, menus, or any structure with nested elements.

In UI rendering:

You can model controls, such as buttons, panels, and layouts, using a shared interface and nest them freely.

    public interface IUIElement
    {
        void Render();
    }

    public class Button : IUIElement
    {
        public void Render() => Console.WriteLine("Render Button");
    }

    public class Panel : IUIElement
    {
        private readonly List<IUIElement> _children = new();

        public void Add(IUIElement element) => _children.Add(element);

        public void Render()
        {
            Console.WriteLine("Render Panel Start");
            foreach (var child in _children)
                child.Render();
            Console.WriteLine("Render Panel End");
        }
    }

    // USAGE
    var panel = new Panel();
    panel.Add(new Button());
    panel.Add(new Button());
    panel.Render();

In tree-like data (e.g., categories, file systems):

    public abstract class Node
    {
        public string Name { get; }

        protected Node(string name) => Name = name;

        public abstract void Print(int indent = 0);
    }

    public class File : Node
    {
        public File(string name) : base(name) { }

        public override void Print(int indent) =>
            Console.WriteLine($"{new string(' ', indent)}- File: {Name}");
    }

    public class Folder : Node
    {
        private readonly List<Node> _children = new();

        public Folder(string name) : base(name) { }

        public void Add(Node node) => _children.Add(node);

        public override void Print(int indent)
        {
            Console.WriteLine($"{new string(' ', indent)}+ Folder: {Name}");
            foreach (var child in _children)
                child.Print(indent + 2);
        }
    }

What .NET engineers should know:

- 👼 Junior: Should recognize tree structures and understand how parent-child relationships work in UI or data.

- 🎓 Middle: Should use the Composite pattern to simplify rendering logic or recursive data processing.

- 👑 Senior: Should design recursive, extensible systems using Composite. Knows how to balance performance and maintainability in large trees or deeply nested UIs.

❓ Give a case for using a Facade over a direct service approach.

A Facade is useful when you want to provide a simplified, unified interface to a complex system. Instead of calling multiple services directly from your controller or UI, you group them behind a facade.

When Facade helps:

Imagine a checkout process in an e-commerce app.

To place an order, you need to:

- Validate the cart

- Reserve inventory

- Process payment

- Create a shipment

- Send a confirmation email

Calling all these services from the controller directly:

    _cartValidator.Validate(cart);
    _inventoryService.Reserve(cart);
    _paymentService.Charge(card);
    _shippingService.CreateOrder(order);
    _emailService.SendConfirmation(user);

This clutters the controller and tightly couples it to low-level logic.

Using a Facade:

    public class CheckoutFacade
    {
        // The five services are injected through the constructor (omitted here for brevity).

        public void CompleteOrder(Cart cart, PaymentInfo card)
        {
            _cartValidator.Validate(cart);
            _inventoryService.Reserve(cart);
            _paymentService.Charge(card);
            _shippingService.CreateOrder(cart);
            _emailService.SendConfirmation(cart.User);
        }
    }

Now your controller calls:

    _checkoutFacade.CompleteOrder(cart, card);

Cleaner, testable, and easier to evolve.

When to avoid it? If your service interaction is truly simple or one-off, adding a facade might be unnecessary overhead.

What .NET engineers should know about Facade:

- 👼 Junior: Should understand that Facade wraps multiple operations into a more straightforward method to make code easier to work with.

- 🎓 Middle: Should create Facades to group related service calls and reduce duplication in controllers or UI layers.

- 👑 Senior: Should know when to introduce a Facade to improve maintainability, shield internal changes, and keep layers loosely coupled.

📚 Resources:

- [RU] Facade Pattern

❓ How can Flyweight be used in C#? Can you provide examples of its implementation in .NET itself?

The Flyweight pattern is all about sharing memory-heavy objects that don’t change often. In C#, it helps when you have a lot of similar objects and want to avoid repeating the same data in memory.

When to use it in your code:

- Lots of value-type-like objects (e.g., coordinates, shapes, letters)

- Large UI trees (e.g., diagram editors, syntax highlighters)

- Read-heavy systems with limited variation

C# Example: Shared text formatting

    // Shared state must be immutable, so the properties are init-only.
    public class TextStyle
    {
        public string Font { get; init; }
        public int Size { get; init; }
        public bool Bold { get; init; }
    }

    public class StyledCharacter
    {
        public char Character { get; }
        public TextStyle Style { get; }

        public StyledCharacter(char character, TextStyle style)
        {
            Character = character;
            Style = style;
        }
    }

Now, 10,000 characters can share the same TextStyle instance, reducing memory use compared to having complete style data per character.
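Sharing only works if something hands out the same instance for the same settings. One way to do that is a small flyweight factory, sketched below. TextStyleFactory is a hypothetical name, the types are redefined as records to keep the example short and immutable, and the cache is not thread-safe.

```csharp
using System;
using System.Collections.Generic;

var factory = new TextStyleFactory();
var a = new StyledCharacter('a', factory.Get("Arial", 12, false));
var b = new StyledCharacter('b', factory.Get("Arial", 12, false));

Console.WriteLine(ReferenceEquals(a.Style, b.Style)); // True
Console.WriteLine(factory.Count);                      // 1

// Immutable, so one instance can be shared safely.
public sealed record TextStyle(string Font, int Size, bool Bold);

public sealed record StyledCharacter(char Character, TextStyle Style);

// Hands out one shared TextStyle per distinct (font, size, bold) combination.
public class TextStyleFactory
{
    private readonly Dictionary<(string Font, int Size, bool Bold), TextStyle> _cache = new();

    public int Count => _cache.Count;

    public TextStyle Get(string font, int size, bool bold)
    {
        var key = (font, size, bold);
        if (!_cache.TryGetValue(key, out var style))
        {
            style = new TextStyle(font, size, bold);
            _cache[key] = style;
        }
        return style;
    }
}
```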

Real-world Flyweight-like examples in .NET:

- String.Intern(): shares identical strings in memory.

      var a = String.Intern("hello");
      var b = String.Intern("hello");
      Console.WriteLine(ReferenceEquals(a, b)); // True

- WPF Freezable types (like Brushes.Red): brushes, pens, and geometries are reused rather than created every time. Example:

      var brush1 = Brushes.Red;
      var brush2 = Brushes.Red;
      // Both refer to the same shared object

- ASP.NET StaticFileMiddleware: cached file metadata and content act like flyweights to serve static assets efficiently.

- Imaging and game engines: libraries like SkiaSharp or MonoGame utilize flyweight techniques for textures, glyphs, and particles.

What .NET engineers should know:

- 👼 Junior: Should understand that shared objects help reduce memory when many items use the same settings or data.

- 🎓 Middle: Should apply the Flyweight pattern to design memory-efficient models and use built-in sharing features like String.Intern() or WPF brushes.

- 👑 Senior: Should recognize memory bottlenecks in large object graphs and apply Flyweight strategically to reduce allocation pressure and GC churn.

❓ Which pattern is used under the hood for Middleware in ASP.NET Core?

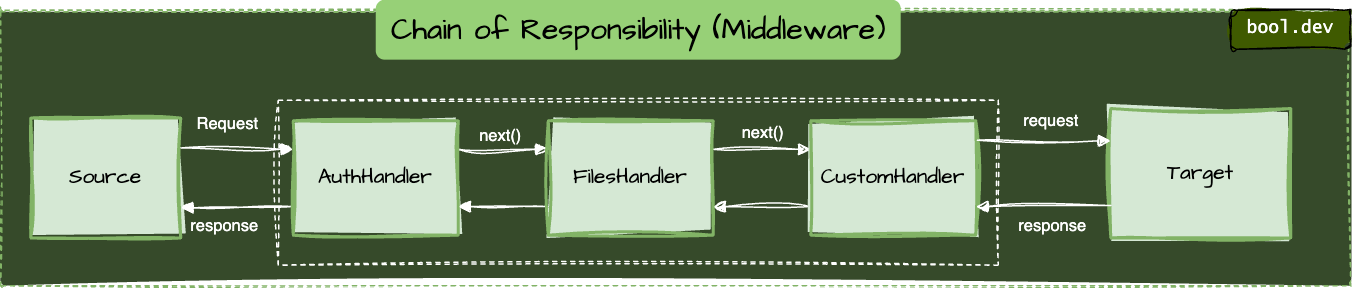

ASP.NET Core middleware is built using the Chain of Responsibility pattern.

This pattern allows a request to pass through a chain of handlers (middlewares), where each handler can:

- act on the request,

- decide to pass it to the next handler,

- or stop the chain entirely.

This approach has several benefits:

- Each middleware decides on its own whether to pass the request on or stop it.

- Cross-cutting concerns (logging, auth, error handling) stay modular.

- The pipeline is flexible and composable, with no hardcoded dependencies between components.
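To show the mechanics without the framework, here is a sketch of the same chain built from plain delegates. This is not how ASP.NET Core is implemented internally: the real pipeline works with RequestDelegate and HttpContext, while this example uses Action<string> and a string as stand-ins, and the handler names are made up.

```csharp
using System;
using System.Collections.Generic;

var log = new List<string>();

// Each "middleware" takes the next handler and returns a new handler that wraps it.
Func<Action<string>, Action<string>> logging = next => request =>
{
    log.Add("log:before");
    next(request);
    log.Add("log:after");
};

Func<Action<string>, Action<string>> auth = next => request =>
{
    if (request == "anonymous")
    {
        log.Add("auth:rejected"); // short-circuit: next is never called
        return;
    }
    next(request);
};

Action<string> endpoint = request => log.Add("endpoint:" + request);

// Build the chain from the inside out: logging -> auth -> endpoint.
Action<string> pipeline = logging(auth(endpoint));

pipeline("alice");
pipeline("anonymous");

Console.WriteLine(string.Join(", ", log));
// log:before, endpoint:alice, log:after, log:before, auth:rejected, log:after
```

Note that the logging handler still runs its "after" step when auth short-circuits, because the rejection happens further down the chain.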

Example of Middleware:

    public class LoggingMiddleware
    {
        private readonly RequestDelegate _next;

        public LoggingMiddleware(RequestDelegate next) => _next = next;

        public async Task InvokeAsync(HttpContext context)
        {
            Console.WriteLine("Before next middleware");
            await _next(context);
            Console.WriteLine("After next middleware");
        }
    }

Middleware is added to the pipeline in Program.cs:

    app.UseMiddleware<LoggingMiddleware>();
    app.UseMiddleware<AuthenticationMiddleware>();
    app.UseMiddleware<CustomMiddleware>();

They run in order, forming a linked chain, just like the Chain of Responsibility pattern describes.

What .NET engineers should know about Middleware:

- 👼 Junior: Should understand that middleware runs in a sequence and can modify requests and responses.

- 🎓 Middle: Should write custom middleware and understand how it fits into the request pipeline using next.

- 👑 Senior: Should design middleware with clear separation of concerns and performance in mind. Understands edge cases, such as short-circuiting and exception handling.

📚 Resources: ASP.NET Core Middleware

❓ Where is Prototype dangerous in C# due to mutable state trees?

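The Prototype pattern creates new objects by cloning an existing instance instead of building one from scratch. In C#, this can be particularly dangerous when the object being cloned has nested mutable references.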

Prototype is risky when:

- The object has complex or nested mutable fields (lists, dictionaries, child objects)

- Clones are assumed to be fully independent

- Developers forget that the default clone (`MemberwiseClone`) is shallow

The dangerous case: shallow copy + shared references

```csharp
public class Document
{
    public string Title { get; set; }
    public List<string> Tags { get; set; } = new();

    // Shallow copy: the clone receives the same Tags list reference
    public Document Clone() => (Document)this.MemberwiseClone();
}

// now clone it
var original = new Document { Title = "Report", Tags = new() { "finance" } };
var copy = original.Clone();

copy.Title = "Copy";      // affects only the copy
copy.Tags.Add("urgent");  // also visible through original.Tags
```

Both original and copy now share the same Tags list. Changing one changes the other, which is rarely what you want.

Safer solution: deep copy

```csharp
public Document Clone()
{
    return new Document
    {
        Title = this.Title,
        Tags = new List<string>(this.Tags) // copy the list
    };
}
```

This ensures that the internal state is not shared between clones.
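The same problem applies to child objects, not only collections. The sketch below compares a shallow clone with a copy constructor; `Report` and `Author` are hypothetical types invented for this example.

```csharp
using System;
using System.Collections.Generic;

var original = new Report("Q1", new List<string> { "finance" }, new Author("Ann"));

var shallow = original.ShallowClone();
var deep = new Report(original); // copy constructor

original.Tags.Add("urgent");
original.Author.Name = "Bob";

Console.WriteLine(shallow.Tags.Count);   // 2: the list is shared
Console.WriteLine(deep.Tags.Count);      // 1: the list was copied
Console.WriteLine(shallow.Author.Name);  // Bob: the child object is shared
Console.WriteLine(deep.Author.Name);     // Ann: the child object was copied

public class Author
{
    public Author(string name) => Name = name;
    public string Name { get; set; }
}

public class Report
{
    public Report(string title, List<string> tags, Author author)
    {
        Title = title;
        Tags = tags;
        Author = author;
    }

    // Copy constructor: every mutable member is copied explicitly.
    public Report(Report other)
        : this(other.Title, new List<string>(other.Tags), new Author(other.Author.Name)) { }

    public string Title { get; set; }
    public List<string> Tags { get; }
    public Author Author { get; }

    public Report ShallowClone() => (Report)MemberwiseClone();
}
```

A copy constructor keeps the copying rules next to the fields they apply to, so a newly added mutable member is harder to forget than with `MemberwiseClone`.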

What .NET engineers should know:

- 👼 Junior: Should know that cloning objects can lead to shared state and unexpected bugs.

- 🎓 Middle: Should implement deep copies when needed and be cautious with mutable fields inside clones.

- 👑 Senior: Should question whether Prototype is the right tool. May prefer factories, mappers, or copy constructors over cloning if state management is complex.

❓ Compare Lazy Singleton with IOptionsMonitor for dynamic config scenarios.

Both Lazy Singleton and IOptionsMonitor<T> are used to manage shared configuration or state, but they serve different purposes in dynamic scenarios.

Lazy Singleton

A lazy singleton delays creation of a single instance until it’s first needed. Once created, it never changes.

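```csharp
public sealed class ConfigService
{
    // Lazy<T> is thread-safe by default
    private static readonly Lazy<ConfigService> _instance =
        new(() => new ConfigService());

    public static ConfigService Instance => _instance.Value;

    // Private constructor: the only instance is created through Instance
    private ConfigService() => ConnectionString = LoadFromFile();

    public string ConnectionString { get; } // loaded once, never refreshed

    // Hypothetical helper; a real app would read a file or environment variable here
    private static string LoadFromFile() => "Server=localhost;Database=app";
}
```

Lazy Singleton is suitable for: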

- Static, immutable configuration

- Avoiding an expensive setup until needed

- Thread-safe shared state

Lazy Singleton is bad for:

- Anything that needs to change at runtime

- Hot-reload or live config updates

IOptionsMonitor<T>

IOptionsMonitor<T> is built for dynamic configuration. It watches config files (like appsettings.json) and updates bound objects when values change.

```csharp
public class MyService
{
    private readonly IOptionsMonitor<MyConfig> _config;

    public MyService(IOptionsMonitor<MyConfig> config)
    {
        _config = config;
    }

    public void Run()
    {
        var current = _config.CurrentValue; // reflects latest config
    }
}
```

IOptionsMonitor is suitable for:

- Live config reload without restarting the app

- Feature toggles, rate limits, connection strings

- Custom `OnChange` subscriptions for reacting to updates

IOptionsMonitor is bad for:

- Immutable logic or values that must not change after startup

- Code that assumes a stable value: state that changes under your feet is hard to reason about
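The `OnChange` subscription mentioned above could look roughly like this. `RateLimitOptions` and `Throttle` are hypothetical names, and the sketch assumes the options are bound elsewhere, for example with `services.Configure<RateLimitOptions>(configuration.GetSection("RateLimit"))`.

```csharp
using System;
using Microsoft.Extensions.Options;

// Hypothetical options class bound to a "RateLimit" configuration section.
public class RateLimitOptions
{
    public int RequestsPerMinute { get; set; } = 60;
}

public sealed class Throttle : IDisposable
{
    private readonly IDisposable? _subscription;
    private volatile int _limit;

    public Throttle(IOptionsMonitor<RateLimitOptions> monitor)
    {
        _limit = monitor.CurrentValue.RequestsPerMinute;

        // The callback runs when the underlying configuration source reloads.
        _subscription = monitor.OnChange(options => _limit = options.RequestsPerMinute);
    }

    public int CurrentLimit => _limit;

    // Unsubscribe so the monitor does not keep this instance alive.
    public void Dispose() => _subscription?.Dispose();
}
```

Copying the value into a field on change gives the class one well-defined place where the state changes, which helps with the "changes under your feet" problem.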

What .NET engineers should know:

- 👼 Junior: Should know that a singleton holds one value for the lifetime of the app, while `IOptionsMonitor` is for config that changes at runtime.

- 🎓 Middle: Should use `IOptionsMonitor` for settings that may change, and Lazy Singleton for static, costly-to-create resources.

- 👑 Senior: Should design config lifecycles wisely, preferring reactive, testable patterns over rigid singletons. Knows when to combine both (e.g., lazy + reloadable proxy).

❓ How to select when to use Abstract Factory vs DI and Func<T>?

| Scenario | Use |
|---|---|
| Complex object families, runtime switch | Abstract Factory |
| App-wide services with DI container | Dependency Injection |
| On-demand creation, dynamic behavior | `Func<T>` or `IServiceProvider` |

See details below:

Abstract Factory

Use when you need to create families of related objects and switch them at runtime.

```csharp
public interface IWidgetFactory
{
    IButton CreateButton();
    ICheckbox CreateCheckbox();
}
```

Practical when object creation involves:

- Multiple variants (e.g., Windows vs Mac UI)

- Complex logic per variant

- Multiple related types that should work together

Cons:

- Overkill for simple object graphs

- Adds extra interfaces and boilerplate
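A minimal sketch of the runtime switch follows. The concrete widget classes and the `WidgetFactories.For` selector are assumptions made for this example, not part of any framework.

```csharp
using System;

IWidgetFactory factory = WidgetFactories.For(OperatingSystem.IsWindows() ? "windows" : "mac");
Console.WriteLine(factory.CreateButton().Render());
Console.WriteLine(factory.CreateCheckbox().Render());

public interface IButton { string Render(); }
public interface ICheckbox { string Render(); }

public interface IWidgetFactory
{
    IButton CreateButton();
    ICheckbox CreateCheckbox();
}

public sealed class WindowsButton : IButton { public string Render() => "[Windows button]"; }
public sealed class WindowsCheckbox : ICheckbox { public string Render() => "[Windows checkbox]"; }
public sealed class MacButton : IButton { public string Render() => "[Mac button]"; }
public sealed class MacCheckbox : ICheckbox { public string Render() => "[Mac checkbox]"; }

// Each factory returns widgets from a single family, so they always match.
public sealed class WindowsFactory : IWidgetFactory
{
    public IButton CreateButton() => new WindowsButton();
    public ICheckbox CreateCheckbox() => new WindowsCheckbox();
}

public sealed class MacFactory : IWidgetFactory
{
    public IButton CreateButton() => new MacButton();
    public ICheckbox CreateCheckbox() => new MacCheckbox();
}

public static class WidgetFactories
{
    // The runtime switch lives in one place; callers only see IWidgetFactory.
    public static IWidgetFactory For(string platform) => platform switch
    {
        "windows" => new WindowsFactory(),
        "mac" => new MacFactory(),
        _ => throw new ArgumentOutOfRangeException(nameof(platform), platform, "Unknown platform")
    };
}
```

The boilerplate listed under Cons is visible here: two families already need six small classes, which only pays off when the families have to stay consistent.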

Dependency Injection (DI)

Use when you want to inject a fully configured instance, and don’t need to control creation manually.

```csharp
public class MyService
{
    private readonly IRepository _repo;

    public MyService(IRepository repo) => _repo = repo;
}
```

Best for:

- Standard services

- Application-wide configuration

- Managing lifetimes (scoped, singleton, transient)

Cons:

- Less flexible if you need runtime switching or multiple strategies

Func<T> or IServiceProvider

Use when you need to create instances on demand or conditionally.

```csharp
public class OrderProcessor(Func<IOrderHandler> handlerFactory)
{
    public void Process() => handlerFactory().Handle();
}
```

Best for:

- Lazy or dynamic instantiation

- Creating many short-lived instances

- Delayed construction or runtime strategy

Cons:

- Can get messy if misused; avoid overuse of `Func<T>` as a poor man's factory
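One practical detail: the built-in Microsoft.Extensions.DependencyInjection container does not resolve `Func<T>` on its own, so the delegate has to be registered. A minimal sketch, with `OrderHandler` as a hypothetical implementation:

```csharp
using System;
using Microsoft.Extensions.DependencyInjection;

var services = new ServiceCollection();
services.AddTransient<IOrderHandler, OrderHandler>();

// Register the factory delegate explicitly; it resolves a fresh handler on each call.
services.AddTransient<Func<IOrderHandler>>(sp => () => sp.GetRequiredService<IOrderHandler>());
services.AddTransient<OrderProcessor>();

using var provider = services.BuildServiceProvider();
provider.GetRequiredService<OrderProcessor>().Process();

public interface IOrderHandler { void Handle(); }

public sealed class OrderHandler : IOrderHandler
{
    public void Handle() => Console.WriteLine("Order handled");
}

public sealed class OrderProcessor(Func<IOrderHandler> handlerFactory)
{
    // The handler is created when Process runs, not when OrderProcessor is constructed.
    public void Process() => handlerFactory().Handle();
}
```

Registering the delegate as transient means it captures the provider it was resolved from, so it also behaves sensibly inside a scope.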

📚 Resources: Dependency Injection in .NET

🏢 Architecture Styles & Modular Design

❓ How could you implement Sidecar proxies for logging/security next to a .NET service?

A Sidecar proxy is a small helper process that runs alongside your main .NET service, usually as a separate container in the same pod. It handles cross-cutting concerns, such as logging, security, or traffic routing, without modifying your app code.

Sidecar Use cases for .NET apps:

- Add logging/encryption without changing app code

- Inject headers, trace IDs, or tokens

- Offload auth (JWT validation, mTLS)

- Centralize retry logic and circuit breakers

How to implement Sidecar with a .NET service:

Option 1: Use an existing Sidecar proxy

- Envoy – Works with service meshes like Istio or AWS App Mesh

- NGINX – Can act as a reverse proxy with Lua or sidecar configs

- Linkerd – A lightweight service mesh that auto-injects sidecars

You run your .NET service and the proxy side-by-side in a Kubernetes pod:

```yaml
containers:
  - name: my-dotnet-api
    image: my-api:latest
  - name: envoy
    image: envoyproxy/envoy
    volumeMounts:
      - mountPath: /etc/envoy
        name: envoy-config
```

The proxy intercepts traffic before it reaches your app, handling tasks such as logging, authentication, and other functions.

Option 2: Write a custom reverse proxy in .NET

Useful in non-Kubernetes environments or if you want complete control.

Example using YARP (Yet Another Reverse Proxy):

```csharp
app.MapReverseProxy(proxyPipeline =>
{
    proxyPipeline.Use(async (context, next) =>
    {
        Console.WriteLine($"Request: {context.Request.Path}");
        await next();
    });
});
```

Your proxy service forwards requests to the real .NET backend and logs, filters, or blocks traffic as needed.

What .NET engineers should know:

- 👼 Junior: A Sidecar proxy is a separate process that helps your app without touching its code.

- 🎓 Middle: Should configure and deploy sidecars with NGINX or Envoy, and understand how they intercept and modify traffic.

- 👑 Senior: Should design services with separation of concerns in mind—choosing when to offload responsibilities to sidecars vs the app, and integrating them cleanly with service meshes.

❓ When would you choose Database-per-Service, and how do you coordinate consistency?

Database-per-Service is a microservice architecture pattern in which each service owns its database. This promotes autonomy and firm service boundaries, but also introduces challenges to data consistency.

When to choose Database-per-Service:

- Strong service isolation is needed. Services evolve independently without risking schema conflicts.

- Different services require different storage types. E.g., use PostgreSQL for billing, MongoDB for logging, and Redis for caching.

- Independent scaling and deployment are goals. Services can be scaled or updated without coordinating DB-level changes.

- You want to enforce strict ownership—no direct access to another service’s data, only communication via API or events.

How to coordinate consistency with the DB per service:

Distributed systems that use Database-per-Service usually settle for eventual consistency instead of distributed transactions. The following patterns help achieve it:

- Saga Pattern

  - Break a transaction into smaller, local transactions that are coordinated across services. If one step fails, compensating actions roll back the changes.

- Domain Events + Messaging

  - Services publish events when their data changes.

  - Other services react asynchronously (via message queues like RabbitMQ, Azure Service Bus)

- Outbox Pattern

  - Events are written to the same DB as the business change

  - A background process reads and publishes them

  - Ensures events aren’t lost even if the service crashes

- Idempotency + Retries

  - Handle duplicate messages and retry logic to ensure eventual delivery without side effects.
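The compensation idea behind a saga can be sketched in a few lines. This is an in-process illustration only: real sagas coordinate steps through messages and persist their state. `SagaStep` and `Saga` are hypothetical names.

```csharp
using System;
using System.Collections.Generic;
using System.Threading.Tasks;

var log = new List<string>();
bool ok = await Saga.RunAsync(new[]
{
    new SagaStep("ReserveStock",
        () => { log.Add("stock reserved"); return Task.CompletedTask; },
        () => { log.Add("stock released"); return Task.CompletedTask; }),
    new SagaStep("ChargeCard",
        () => throw new InvalidOperationException("card declined"),
        () => Task.CompletedTask),
});

Console.WriteLine($"{ok}: {string.Join(", ", log)}"); // False: stock reserved, stock released

// One saga step: a local transaction plus the action that undoes it.
public sealed record SagaStep(string Name, Func<Task> Execute, Func<Task> Compensate);

public static class Saga
{
    // Returns true when every step succeeded, false when the saga was compensated.
    public static async Task<bool> RunAsync(IEnumerable<SagaStep> steps)
    {
        var completed = new Stack<SagaStep>();
        foreach (var step in steps)
        {
            try
            {
                await step.Execute();
                completed.Push(step);
            }
            catch (Exception)
            {
                // Undo the steps that already completed, most recent first.
                while (completed.Count > 0)
                    await completed.Pop().Compensate();
                return false;
            }
        }
        return true;
    }
}
```

Compensations are new actions rather than a database rollback, so they must be safe to retry as well.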

What .NET engineers should know:

- 👼 Junior: Should understand that each service has its database, which avoids tight coupling, but can’t rely on shared queries.

- 🎓 Middle: Should use messaging or events to keep data in sync across services and apply patterns like Outbox and Saga.

- 👑 Senior: Should architect solutions that ensure data consistency across services at scale, design around eventual consistency, and enforce service boundaries at the database level.

📚 Resources:

- Saga distributed transactions pattern

- Saga Orchestration using MassTransit in .NET

- Database-per-service pattern

- Transactional outbox

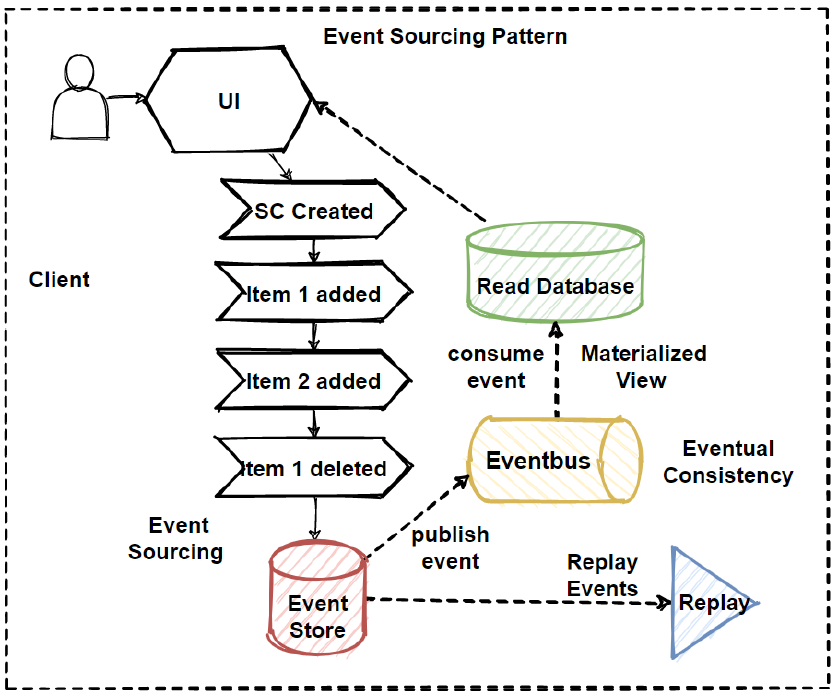

❓ Describe in which scenarios it makes sense to implement the Event Sourcing pattern

Event Sourcing is a pattern where state is not stored as the latest snapshot, but instead as a sequence of domain events. The current state is rebuilt by replaying these events in order.

It’s powerful, but also adds complexity. So it only makes sense when that power is needed.

Use Cases for Event Sourcing

- Complex business rules or rich domain logic

  - You need full traceability of how and why the state changed

  - Domain decisions depend on past events (not just the current state)

- Auditing and historical tracking are first-class features

  - Every change must be recorded with a full history

  - "Why did this happen?" needs to be answered

- You need to rebuild the state or project multiple views

  - Event streams can be replayed into read models

  - Supports CQRS (Command Query Responsibility Segregation)

- Temporal queries are important

  - "What did the account balance look like last month?"

  - "How did the order change over time?"

- Rollbacks and compensations are better modeled as new events

  - No need to mutate state; append a new corrective event

When to avoid event sourcing:

- CRUD apps with simple data and little business logic

- Performance-critical systems where replaying history would be too expensive

- Teams unfamiliar with event modeling, or when audit logs would be enough
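Replaying events and running a temporal query can be shown with a small in-memory sketch. The `Account` aggregate and its two event types are assumptions for illustration; a real system would load the stream from an event store.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

var stream = new List<AccountEvent>
{
    new Deposited(100m, new DateTime(2024, 1, 5)),
    new Withdrawn(30m, new DateTime(2024, 1, 20)),
    new Deposited(50m, new DateTime(2024, 2, 3)),
};

Console.WriteLine(Account.Replay(stream).Balance);                            // 120
Console.WriteLine(Account.Replay(stream, new DateTime(2024, 1, 31)).Balance); // 70

public abstract record AccountEvent(DateTime OccurredAt);
public sealed record Deposited(decimal Amount, DateTime OccurredAt) : AccountEvent(OccurredAt);
public sealed record Withdrawn(decimal Amount, DateTime OccurredAt) : AccountEvent(OccurredAt);

public sealed record Account(decimal Balance)
{
    // Pure function: current state + event -> next state.
    public Account Apply(AccountEvent e) => e switch
    {
        Deposited d => this with { Balance = Balance + d.Amount },
        Withdrawn w => this with { Balance = Balance - w.Amount },
        _ => throw new NotSupportedException(e.GetType().Name)
    };

    // State is rebuilt by folding over the events; asOf turns it into a temporal query.
    public static Account Replay(IEnumerable<AccountEvent> events, DateTime? asOf = null) =>
        events.Where(e => asOf is null || e.OccurredAt <= asOf)
              .Aggregate(new Account(0m), (state, e) => state.Apply(e));
}
```

Nothing is ever updated in place: a correction would be one more event appended to the stream.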

What .NET engineers should know about Event Sourcing:

- 👼 Junior: Should understand that Event Sourcing stores a series of changes instead of just the final result.

- 🎓 Middle: Should be able to design simple event models and apply the pattern in areas with complex change history or audit requirements.

- 👑 Senior: Should be able to architect event-sourced systems with clear aggregates, projections, and versioning strategies. Should know how to implement event sourcing properly, including using existing tools such as EventFlow.

❓ Which patterns could be used for decomposing a monolith into macro/micro services?

The goal of splitting a monolith into macro and microservices is to reduce risk, isolate responsibilities, and allow services to evolve independently.

Multiple patterns can guide this process:

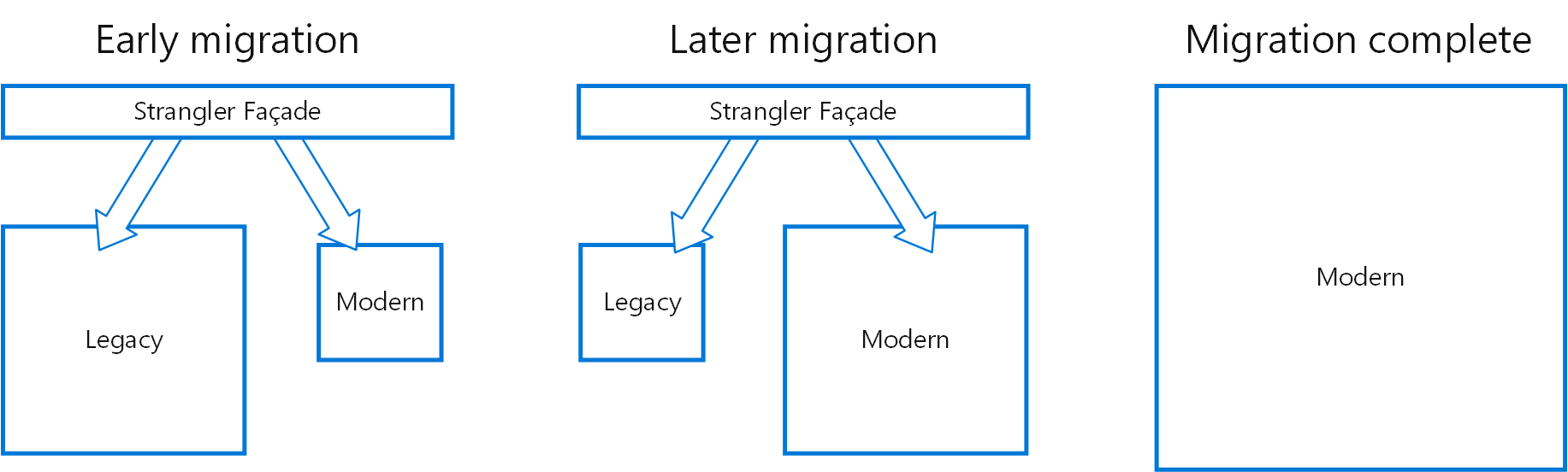

Strangler-Fig Pattern

- Gradually replace monolith functionality by routing specific endpoints to new services.

- Keeps the system running while parts are rewritten

- Ideal for minimizing risk during migration

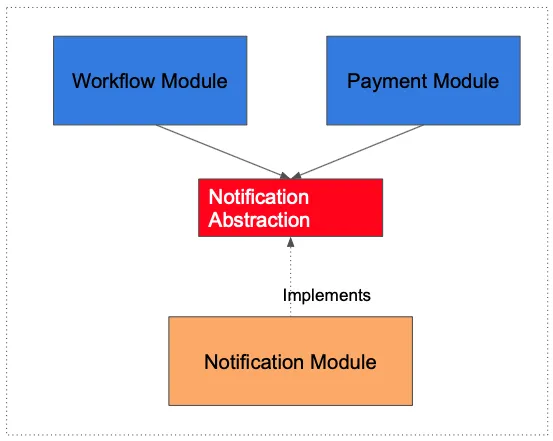

Branch by Abstraction Pattern

- Introduce an abstraction over the existing logic

- Implement new behavior behind the abstraction without changing callers

- Once stable, remove the old path and keep only the new service-backed one

- Useful when migrating shared libraries or service boundaries

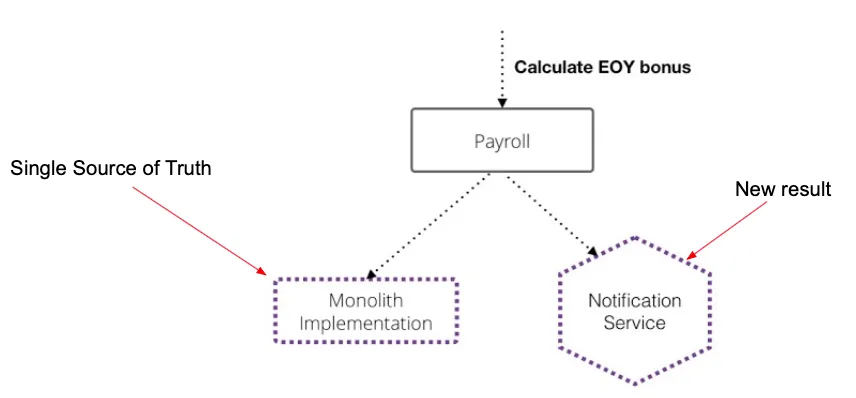

Decorating collaborator pattern

- Wrap an existing component (from the monolith) with a decorator that adds new logic.

- You can route traffic to both the old and new implementations for comparison.

- Helps with gradual replacement or behavior verification

Parallel Run Pattern

- Run both the old and new implementations side by side

- Compare outputs to ensure functional parity

- Great for migrations with high correctness requirements (e.g., billing, accounting)
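A parallel run can be implemented as a wrapper that calls both implementations and reports differences while still returning the trusted result. The sketch below is one possible shape; `IPriceCalculator`, `DelegateCalculator`, and `ParallelRunCalculator` are hypothetical names.

```csharp
using System;
using System.Collections.Generic;

var mismatches = new List<string>();
IPriceCalculator calculator = new ParallelRunCalculator(
    legacy: new DelegateCalculator((qty, price) => qty * price),
    candidate: new DelegateCalculator((qty, price) => qty >= 10 ? qty * price * 0.9m : qty * price),
    reportMismatch: mismatches.Add);

Console.WriteLine(calculator.Calculate(2, 5m));  // 10, both implementations agree
Console.WriteLine(calculator.Calculate(10, 5m)); // 50, the legacy result is returned
Console.WriteLine(mismatches.Count);             // 1

public interface IPriceCalculator
{
    decimal Calculate(int quantity, decimal unitPrice);
}

// Small adapter so the example can define implementations inline.
public sealed class DelegateCalculator(Func<int, decimal, decimal> calculate) : IPriceCalculator
{
    public decimal Calculate(int quantity, decimal unitPrice) => calculate(quantity, unitPrice);
}

public sealed class ParallelRunCalculator(
    IPriceCalculator legacy, IPriceCalculator candidate, Action<string> reportMismatch) : IPriceCalculator
{
    public decimal Calculate(int quantity, decimal unitPrice)
    {
        decimal expected = legacy.Calculate(quantity, unitPrice);
        try
        {
            decimal actual = candidate.Calculate(quantity, unitPrice);
            if (actual != expected)
                reportMismatch($"qty={quantity}: legacy={expected}, new={actual}");
        }
        catch (Exception ex)
        {
            // A failing candidate must never break the production path.
            reportMismatch($"qty={quantity}: new implementation threw {ex.GetType().Name}");
        }
        return expected; // callers keep getting the trusted result
    }
}
```

This only works safely when the candidate has no side effects, or when its side effects are redirected to a sandbox.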

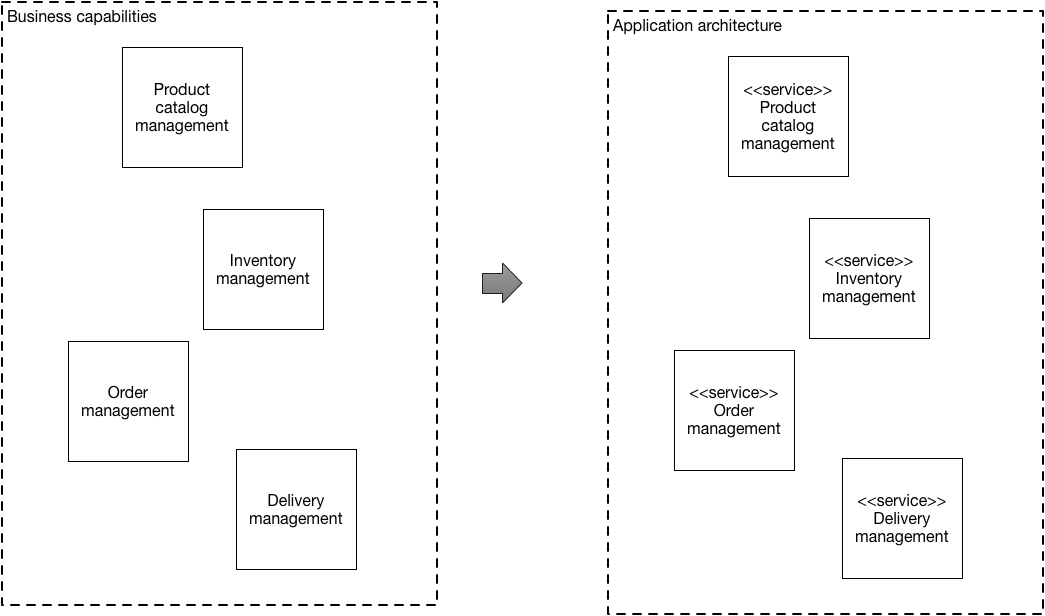

Decompose by Business Capability

- Identify logical business areas (e.g., Orders, Payments, Customers)

- Extract each as a self-contained service with its database and logic

- Aligns well with team ownership and Conway’s Law

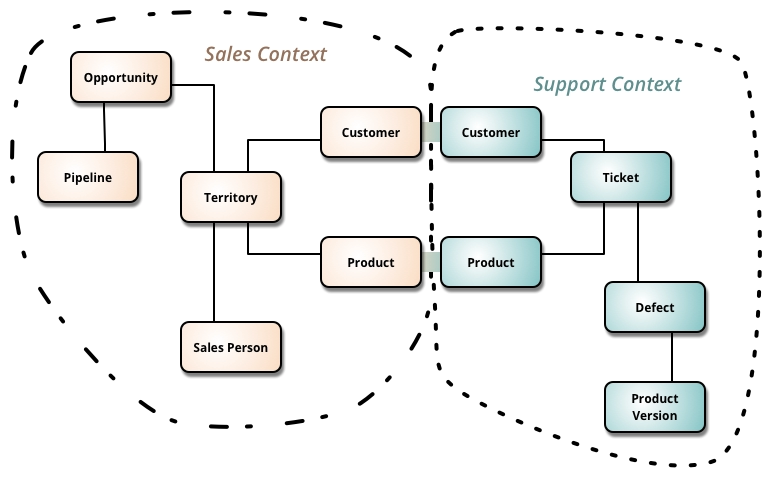

Bounded Context (DDD)

- Split the system based on domain language and context

- Each bounded context becomes a service with clear contracts

- Avoids model confusion and overcoupling

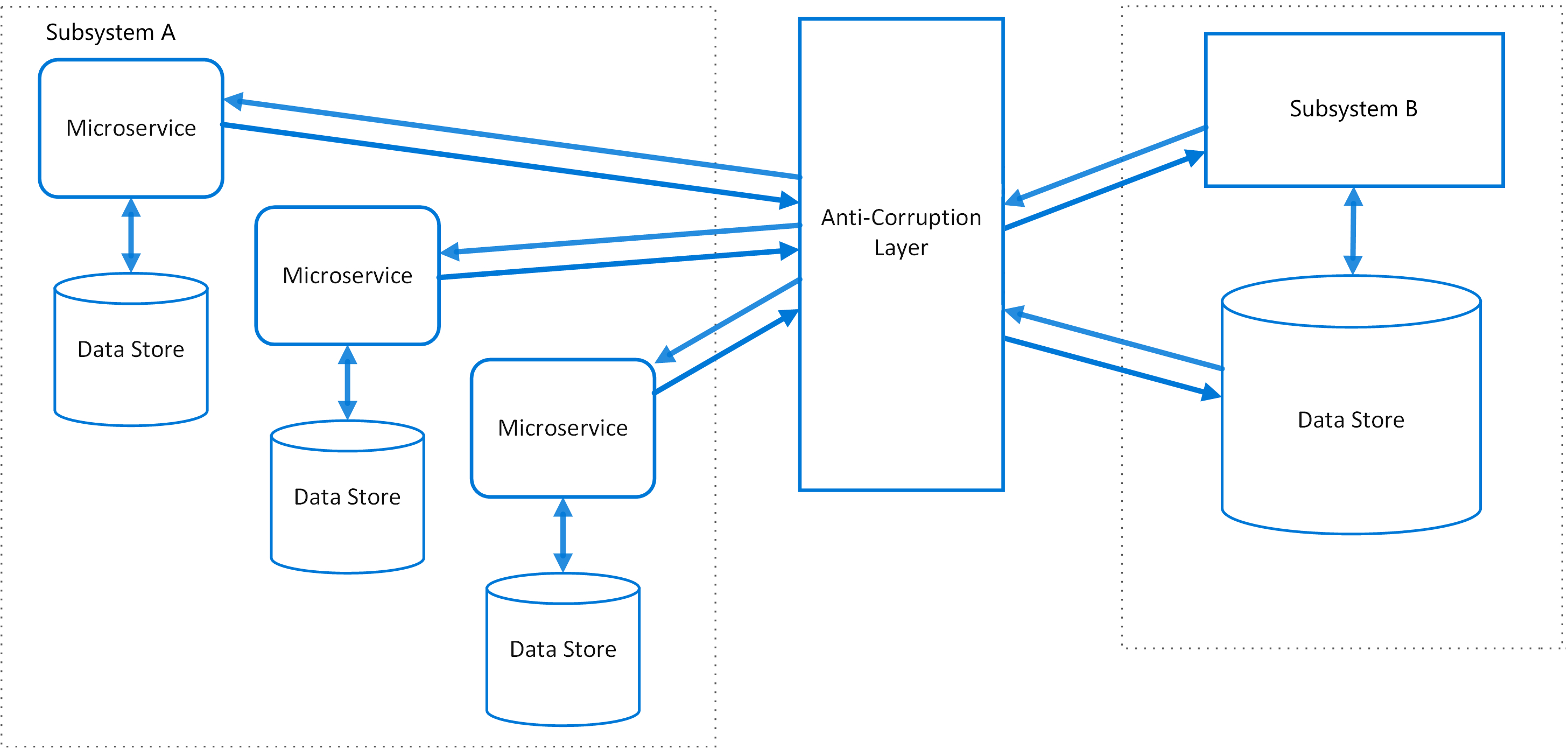

Anti-Corruption Layer (ACL)

- Protects new services from legacy models by translating between systems

- Useful when a new service depends on monolithic logic or data

- Helps decouple without rewriting everything at once

Event-Driven Architecture

- Publish domain events from the monolith

- Other services can subscribe and react

- Enables async integration and reduces direct dependencies

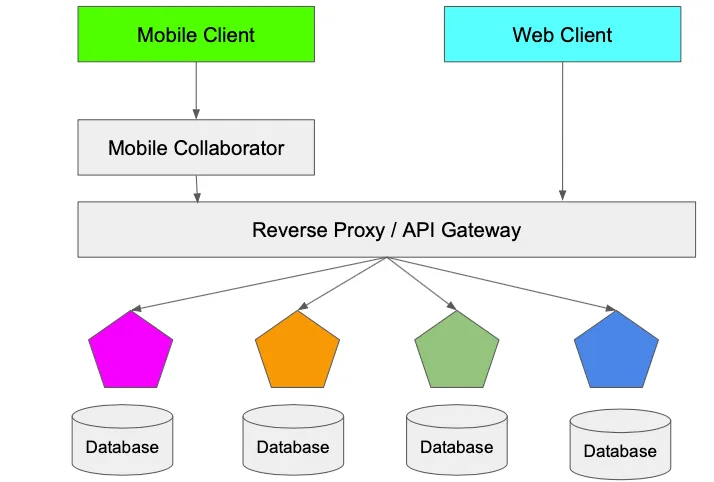

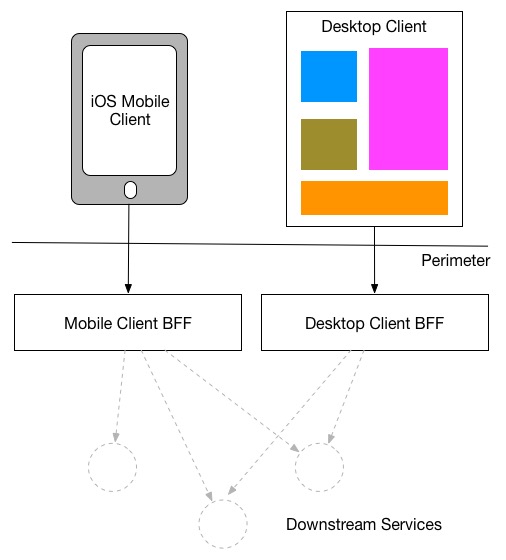

Backend for Frontend (BFF)

- Create separate service layers optimized for specific frontend apps (e.g., web, mobile)

- Allows independent evolution of backend logic and UI concerns

- Great during phased migration with new frontends

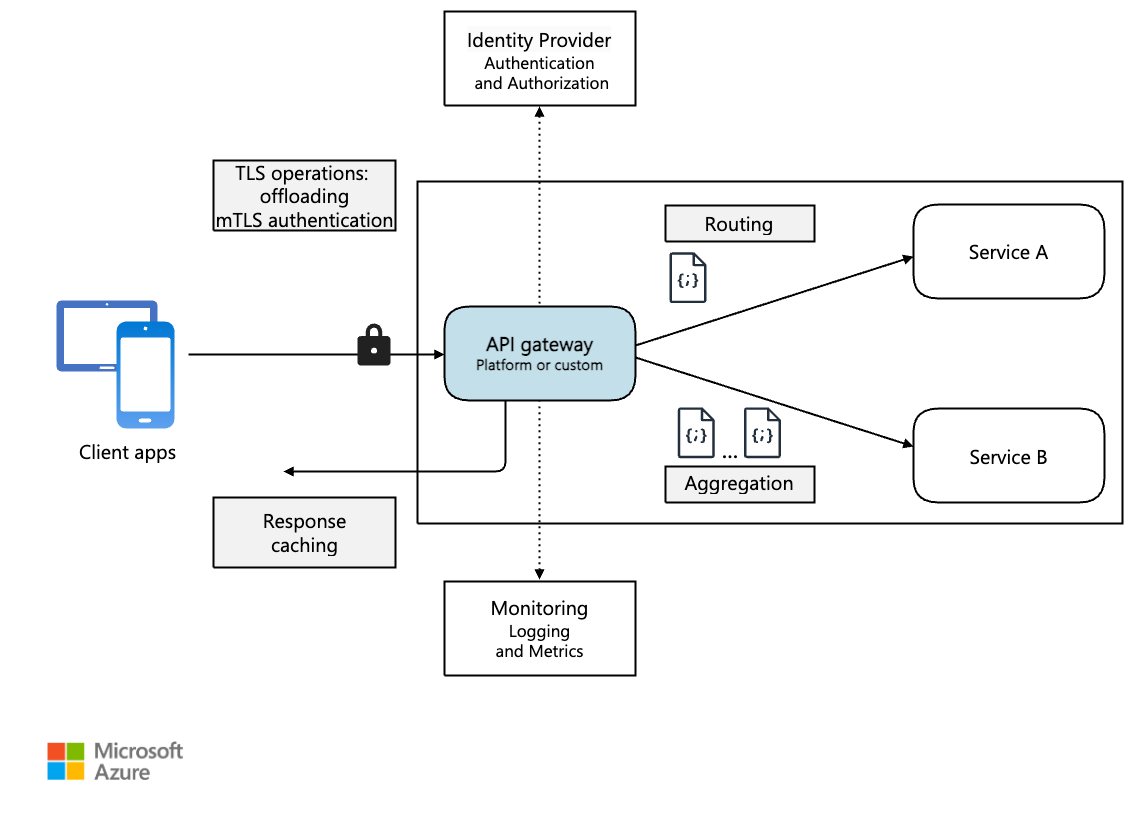

API Gateway

- Central entry point that routes to both the monolith and microservices

- Helps keep the migration invisible to clients

- Supports transformation, auth, and rate limiting centrally

What .NET engineers should know:

- 👼 Junior: Should understand that decomposing a monolith is a gradual process using clear, structured patterns.

- 🎓 Middle: Should apply patterns like Strangler, ACL, and BFF to extract services safely while keeping the system stable.

- 👑 Senior: Should lead decomposition strategy using DDD, event-driven design, and architectural isolation. Mixing patterns can help balance speed, risk, and team capacity.

📚 Resources:

- [RU] Cheat Sheet for Migrating Monolith to Microservices

- Strangler Pattern

- Top 10 Software Architecture Styles You Should Know

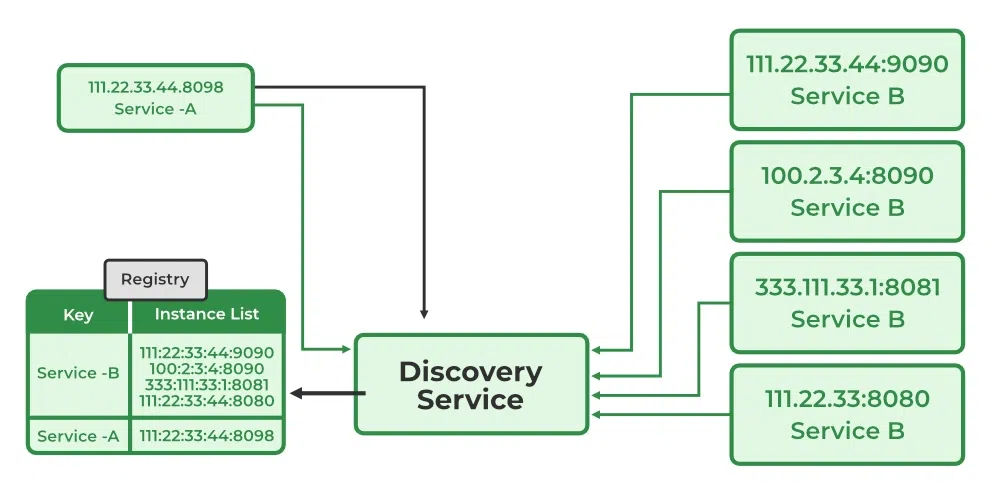

❓ Have you had experience with Service Discovery (e.g., via Consul)?

Service Discovery solves the problem of locating services dynamically in distributed systems, allowing services to communicate with each other without hardcoding IP addresses or URLs.

Tools like Consul, Eureka, or Kubernetes DNS provide dynamic registration and lookup.

✅ What Service Discovery does:

- Registers services as they start up (e.g., `order-service` registered at `http://10.1.1.7:5000`)

- Resolves service names at runtime via DNS or HTTP API

- Supports health checks and removes failed instances

- Works with load balancers to spread traffic automatically

💡 How it's commonly used in .NET apps:

- Register services at startup using a Consul agent or SDK

- Use service names like `http://order-service` instead of hardcoded URLs

- With Polly or YARP, combine service discovery, retries, and load balancing

- Optionally use sidecars or reverse proxies (like Envoy) to abstract discovery from app code

What .NET engineers should know:

- 👼 Junior: Should understand that Service Discovery allows services to find each other without hardcoded addresses.

- 🎓 Middle: Should configure and use tools like Consul or Kubernetes DNS for dynamic lookup in development and production.

- 👑 Senior: Should architect systems around Service Discovery, choosing between client-side vs server-side discovery, and integrating with service meshes or custom policies for retries, health, and observability.

❓ How would you design a Rate Limiter pipeline using ASP.NET Core’s RateLimiter middleware?

ASP.NET Core provides built-in RateLimiter middleware to help protect your APIs from overuse, abuse, or accidental overload. You can configure fine-grained rate limits at the endpoint or pipeline level, without relying on external tools.

Which goal do we want to achieve with the rate limiter?

- Limit how many requests users or clients can make per time window

- Return `429 Too Many Requests` when limits are hit

- Apply limits based on IP, user ID, API key, or route

- Optionally queue or delay requests before rejecting

Steps to add Rate Limiter:

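1. Register the rate limiter services and a policy in Program.cs:

```csharp
using System.Threading.RateLimiting;

builder.Services.AddRateLimiter(options =>
{
    // The default rejection status is 503, so set 429 explicitly
    options.RejectionStatusCode = StatusCodes.Status429TooManyRequests;

    options.AddPolicy("FixedWindow", httpContext =>
        RateLimitPartition.GetFixedWindowLimiter(
            // Partition by client IP: each address gets its own counter
            partitionKey: httpContext.Connection.RemoteIpAddress?.ToString() ?? "unknown",
            factory: _ => new FixedWindowRateLimiterOptions
            {
                PermitLimit = 100,                 // 100 requests
                Window = TimeSpan.FromMinutes(1),  // per minute
                QueueProcessingOrder = QueueProcessingOrder.OldestFirst,
                QueueLimit = 0                     // reject immediately, no queueing
            }));
});
```

2. Add the middleware to the request pipeline:

```csharp
app.UseRateLimiter();
```

3. Apply the policy to routes:

```csharp
app.MapGet("/api/data", () => "OK")
   .RequireRateLimiting("FixedWindow");
```

You can also create multiple policies for authenticated users, anonymous IPs, or specific endpoints.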
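To see what a fixed-window policy does without starting a web app, the limiter type from `System.Threading.RateLimiting` can be used directly. This is a small sketch of the behavior, not part of the middleware setup; the limit of two permits is chosen only to keep the demo short.

```csharp
using System;
using System.Threading.RateLimiting;

// Two permits per one-minute window, no queueing.
using var limiter = new FixedWindowRateLimiter(new FixedWindowRateLimiterOptions
{
    PermitLimit = 2,
    Window = TimeSpan.FromMinutes(1),
    QueueLimit = 0
});

for (int i = 1; i <= 3; i++)
{
    using RateLimitLease lease = limiter.AttemptAcquire();
    Console.WriteLine($"Request {i}: {(lease.IsAcquired ? "allowed" : "rejected")}");
}
```

The third call in the same window is rejected, which is the point where the middleware would return the configured rejection status code.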

What .NET engineers should know:

- 👼 Junior: Should understand what rate limiting is and know it prevents overloading the app.

- 🎓 Middle: Should configure rate limit policies using middleware, partition keys, and proper limit strategies.

- 👑 Senior: Should design rate limits per consumer type (anonymous vs. partner API) and handle abuse patterns, logging, and observability.

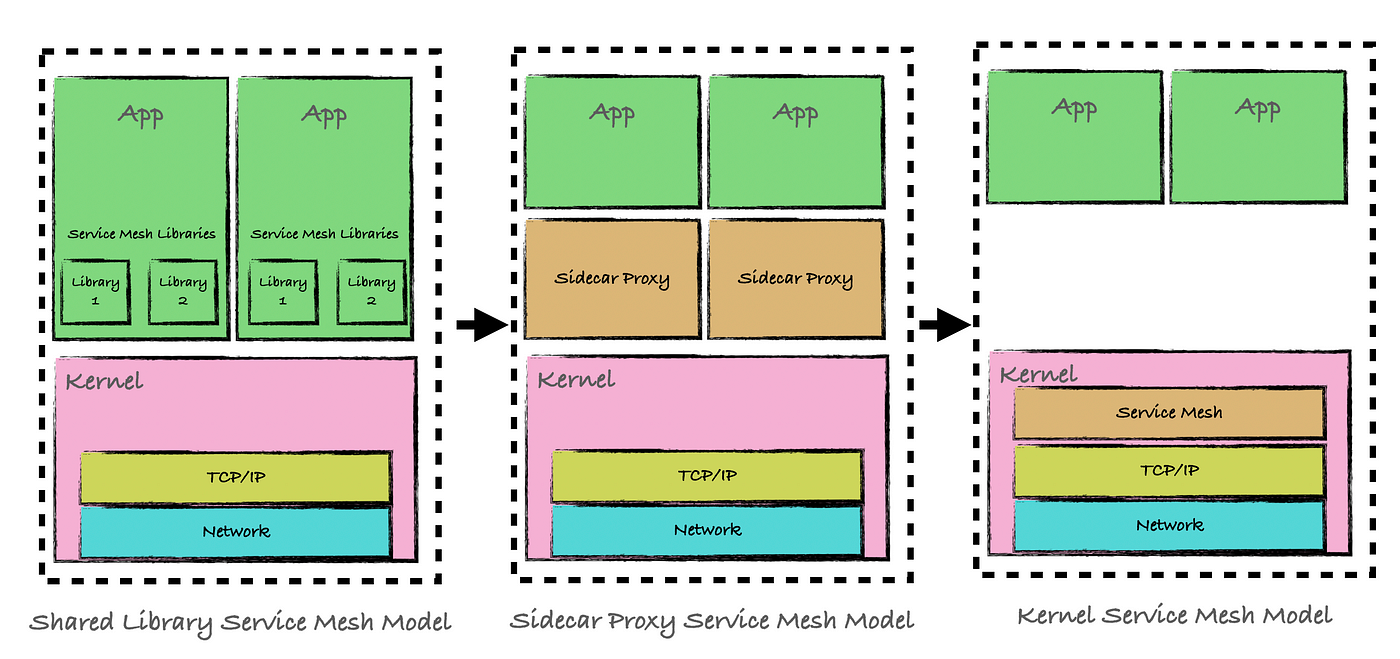

❓ Compare Sidecar vs Service Mesh. When does complexity pay off?

Sidecar and Service Mesh are both architectural patterns that move infrastructure concerns (like traffic routing, security, and observability) out of your main application code—but they differ in scope and complexity.

Sidecar Pattern

A sidecar is a helper process that runs next to your service, typically as a separate container in the same pod.

Responsibilities:

- TLS termination

- Request logging

- Local caching or proxying

- Auth token injection

With a standalone sidecar, you control its logic and configuration per service; there is no central control plane.

When to use:

- You need specific cross-cutting features (e.g., custom logging, local proxy)

- You want lightweight isolation, not full-blown mesh

- Simpler setups without centralized control

Service Mesh

A Service Mesh builds on the sidecar model—but adds orchestration, observability, and security policies across all services.

Key capabilities:

- mTLS and zero-trust networking

- Traffic shaping (canary, retries, circuit breakers)

- Centralized control plane (e.g., Istio's istiod, formerly Pilot)

- Built-in telemetry (metrics, logs, traces)

- Service-to-service communication is fully managed without app code changes.

When to use:

- You run many microservices across teams or environments

- You need policy-based routing, retries, and failovers

- You want zero-trust networking with automatic TLS and identity

When complexity pays off:

- Your team operates at scale: 10+ services, multiple teams

- You need fine-grained control over communication

- You're adopting zero-trust security

- You want platform-level observability and routing logic without touching app code

If you're running only a handful of services, a standalone sidecar proxy such as Envoy or YARP may be sufficient.

What .NET engineers should know:

- 👼 Junior: Should understand that sidecars can help with things like logging or retries without changing app code.

- 🎓 Middle: Should know how to integrate sidecars or configure basic service mesh features (like mTLS or retries).

- 👑 Senior: Should decide when a mesh makes sense and lead its adoption. Can balance complexity vs. benefits and handle policy management, observability, and platform concerns.

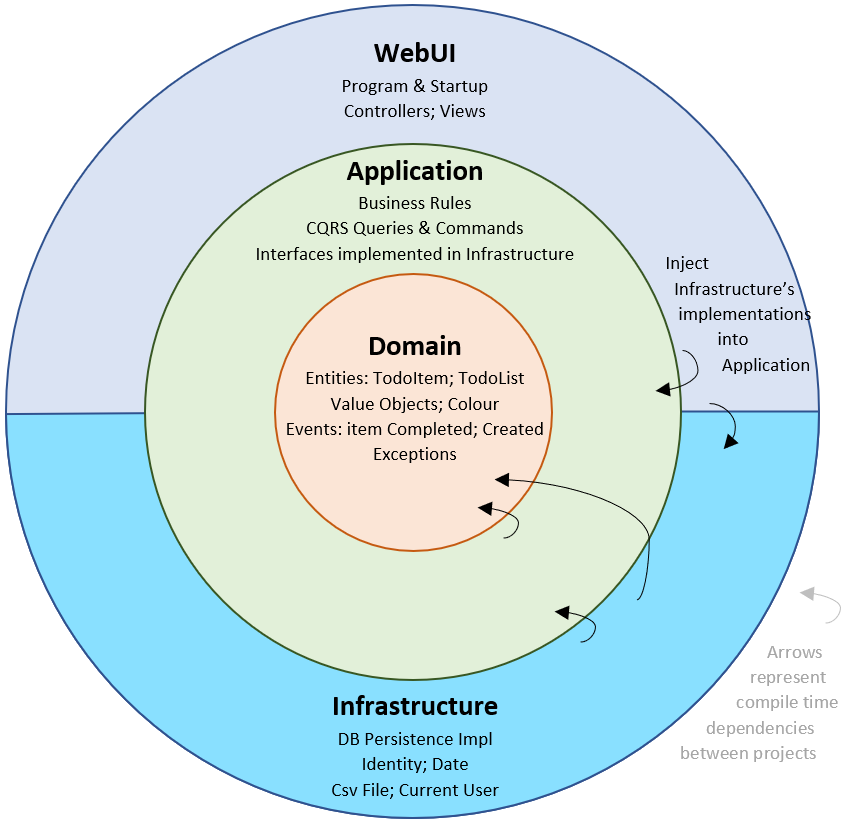

❓ Which type of project structure do you use to organize a .NET project?

Each structure solves different problems. Choose based on domain complexity, team size, and delivery goals.

The most popular options:

- Clean Architecture

- Feature Folder Structure

- Vertical Slice Architecture

- N-Tier Architecture

- Onion / Hexagonal Architecture

Clean Architecture

Proposed by Robert C. Martin (also known as Uncle Bob), Clean Architecture aims to decouple business logic from frameworks. It enforces strict layer boundaries—outer layers depend on inner ones, never the reverse. This improves testability and long-term maintainability.

Pros:

- Strong separation of concerns

- Framework-agnostic, testable, maintainable

Best for:

- Domain-driven systems

- Large teams, long-lived apps

Typical folder structure:

```text
/src
  /Application     ← business logic (CQRS, services, interfaces)
  /Domain          ← core models, entities, enums, rules
  /Infrastructure  ← EF, external APIs, auth providers
  /WebAPI          ← controllers, DI setup
```

Feature Folder Structure

This structure organizes files by user-facing features rather than layers. It’s especially useful for frontend/backend apps where features can be developed in isolation.

Pros:

- Flat and intuitive

- Fast onboarding for devs

- Good for UI-driven apps

Best for:

- Web apps or APIs with distinct screens/features

- Small to mid-size projects

Typical folder structure:

```text
/Features
  /Login
    - Controller.cs
    - ViewModel.cs
    - Service.cs
  /Dashboard
  /Reports
```

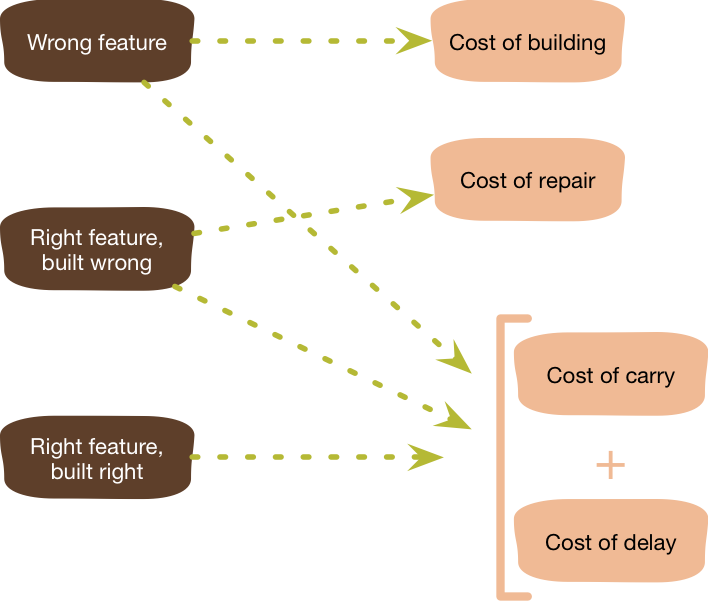

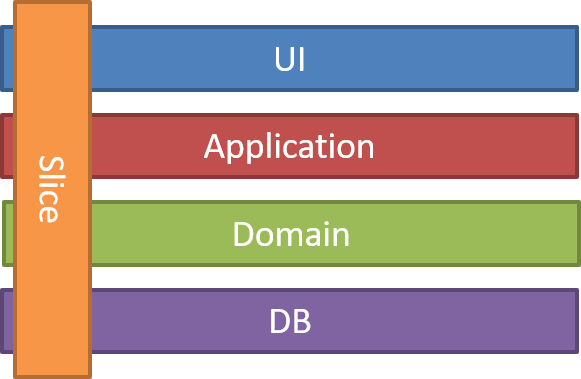

Vertical Slice Architecture

Vertical Slice Architecture was first proposed by Jimmy Bogard in 2018. Jimmy is also the author of libraries such as MediatR and AutoMapper.

Vertical Slice Architecture is an approach to code organization in which an application is divided into independent functional slices. Each slice includes all the necessary components to implement a specific function, from the user interface to the database. Unlike traditional layered architectures, which divide layers by technical aspects, vertical architecture organizes code around business features or user scenarios.

Pros:

- Feature-based organization

- Low coupling, testable

- Fast to develop and maintain

Best for:

- Modular APIs

- CQRS apps with many use cases

Typical folder structure:

```text
/src
  /Features
    /CreateOrder
      - Command.cs
      - Handler.cs
      - Validator.cs
    /GetOrder
      - Query.cs
      - Handler.cs
  /Infrastructure
  /WebAPI
```

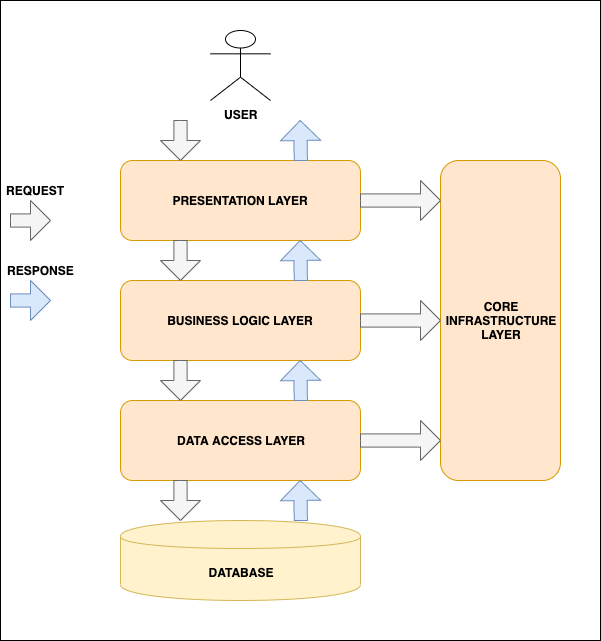

N-Tier Architecture

A classic layered architecture that separates an app by technical roles: UI, business logic, and data access. Still common in many teams due to its simplicity and familiarity.

Pros:

- Familiar, simple

- Easy onboarding

Best for:

- CRUD apps

- Internal tools, small teams

Typical folder structure:

/src

/Presentation ← UI or API layer

/Business ← services, logic

/DataAccess ← EF, SQL logic

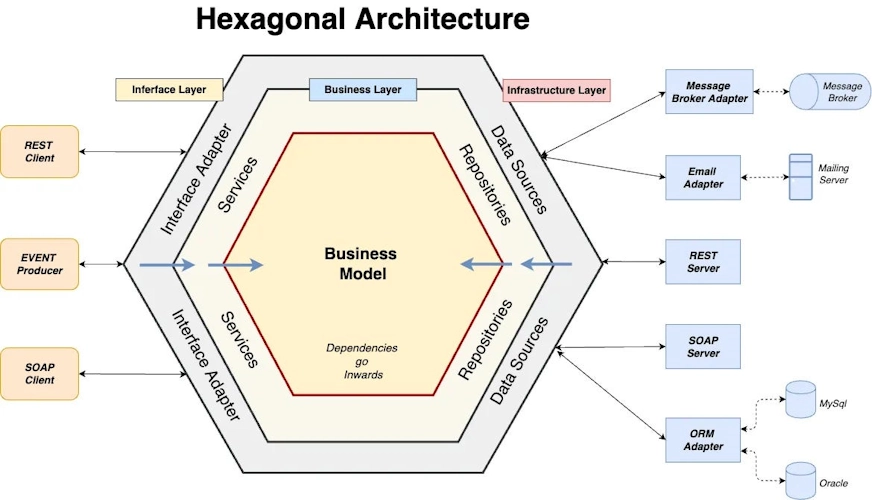

/Common ← shared models, utilitiesOnion / Hexagonal Architecture

These layered designs emphasize a domain-centric core with ports (interfaces) and adapters (implementations) wrapping around it. The app’s core doesn’t know about infrastructure—it’s fully decoupled.

Pros:

- The core domain is isolated

- Ports/adapters around it

- Flexible and test-friendly

Best for:

- Core-focused, domain-rich systems

- Teams aiming for long-term maintainability

Typical folder structure:

```text
/src
  /Core            ← domain + interfaces
  /Application     ← use cases
  /Infrastructure  ← DB, APIs, messaging
  /WebAPI          ← UI / REST layer
```

What .NET engineers should know:

- 👼 Junior: Start with N-Tier or Feature Folders for a simpler structure.

- 🎓 Middle: Move toward Vertical Slice or Clean Architecture to improve testability and separation.

- 👑 Senior: Design modular systems with domain boundaries, layering, and clear ownership. Mix patterns as needed.

📚 Resources:

- The Clean Architecture

- Vertical Slice Architecture

- N-tier architecture style

- Understanding Hexagonal, Clean, Onion, and Traditional Layered Architectures: A Deep Dive

- Common web application architectures

❓ Compare Vertical Slice Architecture with Feature-Folder

Both Vertical Slice Architecture and Feature Folder Structure organize code around features rather than technical layers, but they differ in their structure, patterns, and goals.

| Feature | Vertical Slice | Feature Folder |
|---|---|---|
| Focus | One action per slice (CQRS) | One screen/module per folder |
| Command/Query separation | Yes | No (optional) |
| Use of MediatR / handlers | Often (standard practice) | Rare (optional) |
| Structure depth | Deeper, with validation/logic split | Flatter, more tightly grouped |
| Best for | Backend APIs, business logic flows | UI apps, MVC apps, simple APIs |

What .NET engineers should know:

- 👼 Junior: Should understand that both styles group code by functionality, not by layer.

- 🎓 Middle: Should choose Feature Folder for simplicity, or Vertical Slice for testable, CQRS-driven APIs.

- 👑 Senior: Should architect projects using Vertical Slice when commands and queries grow complex, and Feature Folders when working with UI-first or lightweight applications.

❓ In a green-field project, when is a monolith preferable to microservices, and what hard limits eventually force a split?

Starting with a monolith is often the better choice for a new project. It’s simpler to build and deploy when the team is small and the domain is still in its early stages of development.

✅ When a monolith is preferable:

- Small team size. Coordinating multiple services adds operational overhead that small teams don’t need early on.

- Unclear domain boundaries. It is hard to design proper service boundaries before you understand the problem space, and premature slicing leads to tightly coupled services.

- Low operational maturity. Microservices require monitoring, tracing, retries, CI/CD, and infrastructure tooling—if you don’t have those, a monolith is safer.

- Single deployment target. If your system is primarily backend and frontend for one platform, service decomposition may not yield significant benefits yet.

- Speed matters early. You can move faster without inter-service contracts and async messaging to manage.

🚨 Signals to split monolith:

- Scaling bottlenecks. Some parts of the system need to scale independently (e.g., reporting, real-time feeds, background jobs).

- Deployment contention. Teams step on each other during deploys, and one broken module delays all features.

- Team autonomy. Multiple teams want to own, deploy, and scale their parts independently.

- High-risk modules. You need isolation around things like payments, auth, or external integrations to reduce the blast radius.

- Technology diversity. Some domains may benefit from different runtimes (e.g., Node.js for frontend, .NET for billing).

Practical approach:

- Start with a modular monolith, cleanly structured with clear boundaries (e.g., per domain or module), but deployed as a single unit.

- Then extract microservices incrementally as the limits above are reached.

What .NET engineers should know:

- 👼 Junior: Should understand that a monolith is not evil. It’s often the best starting point.

- 🎓 Middle: Should structure code for modularity (e.g., Clean Architecture, Modular Monolith) to keep future extraction cheap.

- 👑 Senior: Should anticipate when a split is necessary, based on org/team growth, scaling patterns, or domain boundaries. Leads the move using the strangler pattern, service templates, and clear contracts.

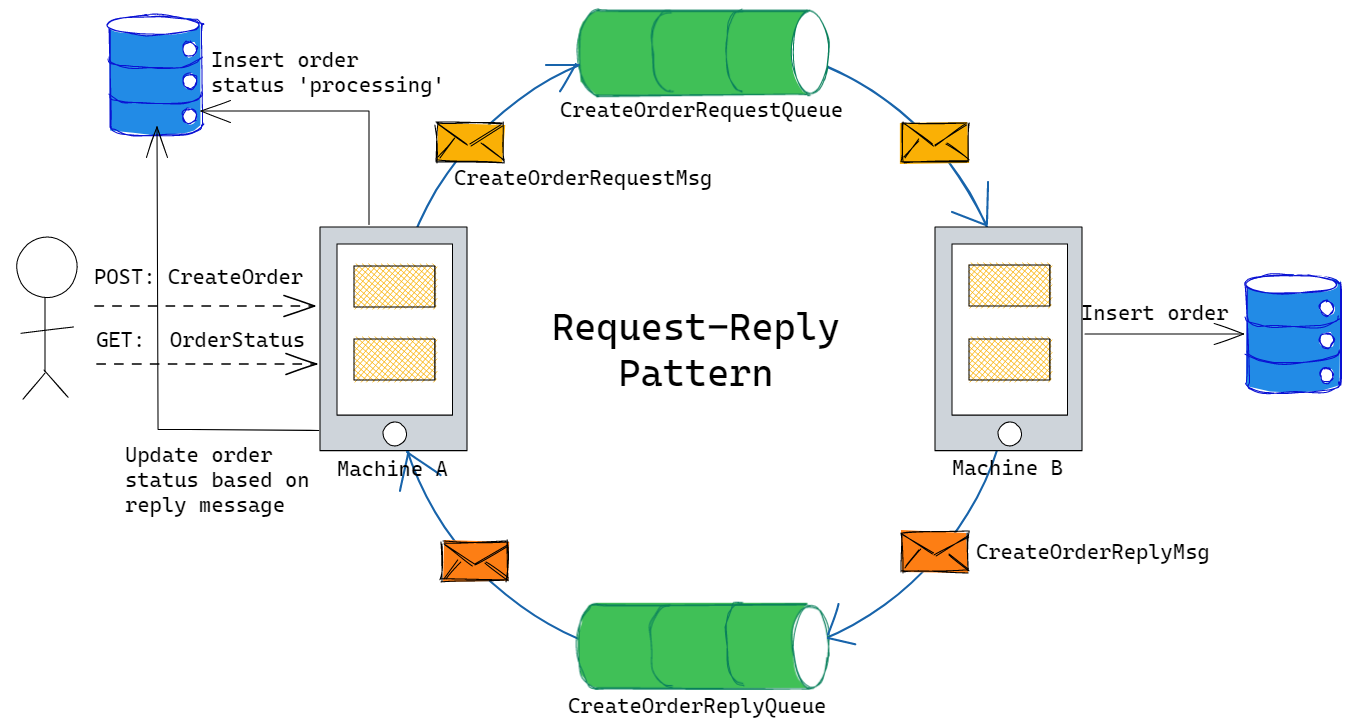

❓ How to handle the request/reply pattern in EDA architecture?

Event-Driven Architecture (EDA) is well-suited for decoupling and scalability, but it is not naturally designed for request/reply, where one service asks another and waits for a response.

You can still support this pattern; just be mindful of consistency and responsiveness.

Here are common approaches:

1. Avoid it when possible

In EDA, first reconsider whether request/reply is needed at all. Eventual consistency or local caching often works better. When you do need a response, consider options 2-5.

2. Use correlation IDs

- Service A sends a `RequestXEvent` with a unique `CorrelationId`

- Service B handles it and replies with a `ResponseXEvent` using the same `CorrelationId`

- Service A listens for the reply, matching it to the original request

```csharp
// Service A publishes
await _bus.PublishAsync(new GetCustomerRequestEvent(customerId, correlationId));

// Service A waits for response event with matching CorrelationId
```

3. Use reply queues or topics

- Some brokers support dedicated reply channels or temporary queues.

- Include a `ReplyTo` property in the message

- Consumer (Service B) sends the response to that channel

- Requestor (Service A) listens and matches it
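The correlation-ID approach from option 2 can be sketched with a dictionary of pending requests. This is an in-memory illustration: `RequestReplyClient` is a hypothetical class, and the `publish` delegate stands in for whatever broker client the service uses.

```csharp
using System;
using System.Collections.Concurrent;
using System.Threading.Tasks;

RequestReplyClient? client = null;
client = new RequestReplyClient((id, payload) =>
{
    // Simulated Service B: replies on another thread with the same correlation ID.
    _ = Task.Run(() => client!.OnReply(id, $"customer:{payload}"));
    return Task.CompletedTask;
});

Console.WriteLine(await client.RequestAsync("42", TimeSpan.FromSeconds(1))); // customer:42

// Service A side: remembers pending requests and completes them when a reply arrives.
public sealed class RequestReplyClient
{
    private readonly ConcurrentDictionary<Guid, TaskCompletionSource<string>> _pending = new();
    private readonly Func<Guid, string, Task> _publish; // hypothetical broker publish call

    public RequestReplyClient(Func<Guid, string, Task> publish) => _publish = publish;

    public async Task<string> RequestAsync(string payload, TimeSpan timeout)
    {
        var correlationId = Guid.NewGuid();
        var tcs = new TaskCompletionSource<string>(TaskCreationOptions.RunContinuationsAsynchronously);
        _pending[correlationId] = tcs; // register before publishing to avoid missing a fast reply
        try
        {
            await _publish(correlationId, payload);
            // Never wait forever: the reply may be lost or the other service may be down.
            return await tcs.Task.WaitAsync(timeout);
        }
        finally
        {
            _pending.TryRemove(correlationId, out _);
        }
    }

    // Called by the subscription that listens for response events.
    public void OnReply(Guid correlationId, string response)
    {
        // Unknown or late replies are ignored.
        if (_pending.TryRemove(correlationId, out var tcs))
            tcs.TrySetResult(response);
    }
}
```

The timeout matters as much as the matching: without it, a lost reply turns into a request that never completes.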

4. Use gRPC/HTTP for sync calls inside a bounded context

When a strict request/reply is necessary and latency matters, it’s OK to fall back to REST or gRPC between services, but:

- Keep it within a bounded context

- Avoid chaining calls (no service A → B → C → D …)

5. Consider a hybrid architecture

For read-heavy scenarios, cache the data locally using:

- Materialized views

- Read models updated via integration events

What .NET engineers should know:

- 👼 Junior: Should understand that EDA prefers async messaging and eventual consistency. Be aware that a request/reply can disrupt that model.

- 🎓 Middle: Expected to implement correlation IDs, reply topics, and fallback sync calls. Understand the trade-offs.

- 👑 Senior: Should decide when request/reply is justified, design fault-tolerant flows with timeouts, deduplication, and idempotency. Can integrate retry, backoff, or even circuit breaker patterns for resilience.

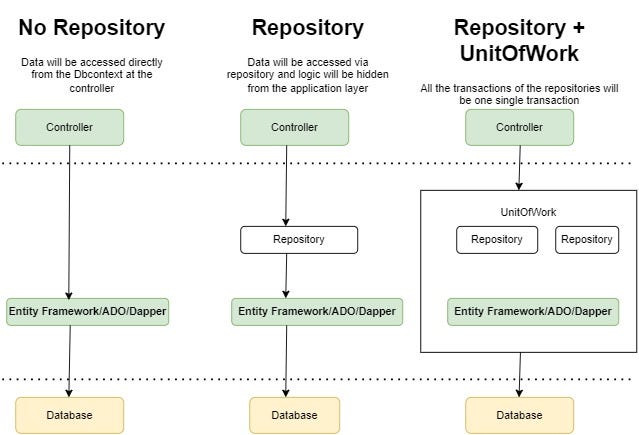

❓ What are the pros and cons of combining UnitOfWork + Repository + CQRS?

Combining Unit of Work, Repository, and CQRS patterns is common in .NET apps, but it’s essential to know when and how to apply them.

The UnitOfWork manages database transactions, tracking changes, and committing them as a single unit of work. The Repository abstracts data access logic, while CQRS separates write (command) and read (query) operations into distinct models.

Pros:

- Clean architecture and clear separation of concerns.

- Easy to test due to clear boundaries and abstractions.

- Easier management of complex transactions through UnitOfWork.

- Improved readability and maintainability by splitting reads and writes explicitly.

- Flexibility in scaling and optimizing separate paths (e.g., read database replicas).

Cons:

- It can introduce unnecessary complexity in simple CRUD applications.

- Risk of over-abstraction leading to redundant code.

- Potential performance overhead from additional layers.

- A higher entry barrier for newcomers to understand the system.

Code example:

```csharp
// Simplified example combining patterns:

// Unit of Work: owns the DbContext and commits all changes together
public class UnitOfWork : IUnitOfWork
{
    private readonly DbContext _context;

    public UnitOfWork(DbContext context) => _context = context;

    public IRepository<User> UserRepository => new Repository<User>(_context);

    public async Task CommitAsync() => await _context.SaveChangesAsync();
}

// Repository: hides data access behind a domain-oriented interface
public interface IUserRepository : IRepository<User>
{
    Task<User> GetUserByEmailAsync(string email);
}

// CQRS query side: a MediatR-style handler that only reads
public class GetUserQueryHandler : IRequestHandler<GetUserQuery, User>
{
    private readonly IUserRepository _repository;

    public GetUserQueryHandler(IUserRepository repository)
        => _repository = repository;

    public async Task<User> Handle(GetUserQuery request, CancellationToken cancellationToken)
        => await _repository.GetUserByEmailAsync(request.Email);
}
```

What .NET engineers should know:

- 👼 Junior: Should understand the basic purpose of each pattern individually (UnitOfWork, Repository, CQRS), and be able to use them in straightforward scenarios.

- 🎓 Middle: Expected to recognize when to combine these patterns effectively, understand the trade-offs, and be comfortable creating structured solutions using them.

- 👑 Senior: Should have deep knowledge of when and why to avoid unnecessary complexity. Able to architect and optimize systems considering scalability, maintainability, and performance, applying these patterns judiciously.
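The write side is where the Unit of Work earns its place, so a command-side sketch may help round out the picture. The version below is deliberately free of EF Core and MediatR so it runs on its own: `InMemoryUnitOfWork`, `RegisterUserCommand`, and `RegisterUserCommandHandler` are hypothetical names, and the contracts are simplified stand-ins rather than the interfaces used elsewhere in this article.

```csharp
using System;
using System.Collections.Generic;
using System.Threading.Tasks;

public record User(Guid Id, string Email);

// Hypothetical minimal contracts for illustration only.
public interface IUserRepository
{
    void Add(User user);
}

public interface IUnitOfWork
{
    IUserRepository Users { get; }
    Task<int> CommitAsync();
}

// In-memory stand-in for a DbContext-backed unit of work: changes are staged
// by the repository and become visible only after CommitAsync.
public sealed class InMemoryUnitOfWork : IUnitOfWork, IUserRepository
{
    private readonly List<User> _staged = new();

    public List<User> Committed { get; } = new();

    public IUserRepository Users => this;

    public void Add(User user) => _staged.Add(user);

    public Task<int> CommitAsync()
    {
        var count = _staged.Count;
        Committed.AddRange(_staged);
        _staged.Clear();
        return Task.FromResult(count);
    }
}

public record RegisterUserCommand(string Email);

// CQRS write side: the handler changes state through the repository and
// commits exactly once at the end of the use case.
public sealed class RegisterUserCommandHandler
{
    private readonly IUnitOfWork _unitOfWork;

    public RegisterUserCommandHandler(IUnitOfWork unitOfWork) => _unitOfWork = unitOfWork;

    public async Task<Guid> HandleAsync(RegisterUserCommand command)
    {
        var user = new User(Guid.NewGuid(), command.Email);
        _unitOfWork.Users.Add(user);
        await _unitOfWork.CommitAsync();
        return user.Id;
    }
}
```

Keeping the commit in the command handler, and never in a query handler or inside a repository method, is one common way to keep the transaction boundary visible. Whether the extra layer is worth it over using `DbContext` directly is exactly the trade-off listed in the cons above.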

📚 Resources:

❓ Can you describe the "Outbox Pattern" and the problem it solves?

The Outbox Pattern solves a classic problem in distributed systems: ensuring data consistency between your database and a message broker (such as RabbitMQ, Kafka, or Service Bus).

The problem appears when you need to save data to a database and publish an event to notify other systems — and both must happen reliably.

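If the database write succeeds but the message publish fails (or vice versa), you end up with an inconsistent state. The Outbox Pattern avoids this by saving the event to an outbox table in the same database transaction as the business data; a background worker then reads that table and publishes the events to the broker.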

What problem does it solve:

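- Prevents event loss when coordinating between the database and message queues. Delivery is at-least-once, so consumers still need to handle duplicates.

- Ensures atomicity: the data and the pending event are committed in one transaction, so they cannot get out of sync.

- Works even if the system crashes between the DB write and the message publish, because the unsent event stays in the outbox and is retried.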
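The mechanics can be sketched as an outbox table plus a background dispatcher. The example below is a minimal sketch under loud assumptions: `FakeDatabase` is an in-memory stand-in for a real database (where both writes would share one transaction), the `publish` delegate stands in for a broker client, and all type names are hypothetical.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Threading.Tasks;

public record Order(Guid Id, decimal Total);

public sealed class OutboxMessage
{
    public Guid Id { get; init; } = Guid.NewGuid();
    public string Type { get; init; } = "";
    public string Payload { get; init; } = "";
    public DateTime? ProcessedAtUtc { get; set; }
}

// In-memory stand-in for a relational database. In a real system both lists
// would be tables written inside one database transaction.
public sealed class FakeDatabase
{
    public List<Order> Orders { get; } = new();
    public List<OutboxMessage> Outbox { get; } = new();

    // Stands in for "insert order + insert outbox row, then commit".
    public void SaveOrderWithEvent(Order order, OutboxMessage message)
    {
        Orders.Add(order);
        Outbox.Add(message);
    }
}

// Background dispatcher: publishes pending messages and marks each one as
// processed only after the publish call succeeded.
public sealed class OutboxDispatcher
{
    private readonly FakeDatabase _db;
    private readonly Func<OutboxMessage, Task> _publish; // hypothetical broker call

    public OutboxDispatcher(FakeDatabase db, Func<OutboxMessage, Task> publish)
    {
        _db = db;
        _publish = publish;
    }

    // Returns the number of messages published in this run.
    public async Task<int> DispatchPendingAsync()
    {
        var sent = 0;
        foreach (var message in _db.Outbox.Where(m => m.ProcessedAtUtc is null).ToList())
        {
            try
            {
                await _publish(message);
                message.ProcessedAtUtc = DateTime.UtcNow;
                sent++;
            }
            catch (Exception)
            {
                // Leave the message pending and stop; the next run retries it.
                break;
            }
        }
        return sent;
    }
}
```

Note the gap between the publish call and the `ProcessedAtUtc` update: a crash there means the same message is published again on the next run. That is why this approach gives at-least-once delivery and why consumers are expected to be idempotent.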

What .NET engineers should know:

- 👼 Junior: Should know it ensures reliable event publishing alongside database writes.

- 🎓 Middle: Should understand how to store events in an outbox table and use background workers to dispatch them.

- 👑 Senior: Should implement full resilience — idempotent handlers, deduplication, and integration with message buses.
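On the consumer side, deduplication can be sketched as a guard keyed by message ID. This is an illustrative in-memory version with hypothetical names (`IdempotentConsumer`, `HandleAsync`); a production variant would more likely store processed IDs in the consumer's database, in the same transaction as the handler's side effects, so the record survives restarts.

```csharp
using System;
using System.Collections.Concurrent;
using System.Threading.Tasks;

// Hypothetical consumer-side guard: remembers processed message IDs so a
// redelivered message does not run the handler twice.
public sealed class IdempotentConsumer
{
    private readonly ConcurrentDictionary<Guid, bool> _processed = new();
    private readonly Func<string, Task> _handler;

    public IdempotentConsumer(Func<string, Task> handler) => _handler = handler;

    // Returns true when the handler ran, false when the message was a duplicate.
    public async Task<bool> HandleAsync(Guid messageId, string payload)
    {
        // TryAdd is atomic: only the first delivery of a given ID gets through.
        if (!_processed.TryAdd(messageId, true))
            return false;

        try
        {
            await _handler(payload);
            return true;
        }
        catch
        {
            // Forget the ID on failure so a retry can process the message.
            _processed.TryRemove(messageId, out _);
            throw;
        }
    }
}
```

The message ID here would typically be the outbox row's ID carried in the message headers, which gives the consumer a stable key to deduplicate on.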

📚 Resources: Revisiting the Outbox Pattern